This weekend's plot shows the measurement of the branching fractions for neutral B and Bs meson decays into muon pairs:

This is not exactly a new thing. LHCb and CMS separately announced evidence for the B0s→μ+μ- decay in summer 2013, and a preliminary combination of their results appeared a few days after. The plot above comes from the recent paper where a more careful combination is performed, though the results change only slightly.

A neutral B meson is a bound state of an anti-b-quark and a d-quark (B0) or an s-quark (B0s), while for an anti-B meson the quark and the antiquark are interchanged. Their decays to μ+μ- are interesting because they are strongly suppressed in the standard model. At the parton level, the quark-antiquark pair annihilates into a μ+μ- pair. As for all flavor changing neutral current processes, the lowest order diagrams mediating these decays occur at the 1-loop level. On top of that, there is the helicity suppression by the small muon mass, and the CKM suppression by the small Vts (B0s) or Vtd (B0) matrix elements. Beyond the standard model one or more of these suppression factors may be absent and the contribution could in principle exceed that of the standard model even if the new particles are as heavy as ~100 TeV. We already know this is not the case for the B0s→μ+μ- decay. The measured branching fraction (2.8 ± 0.7)x10^-9 agrees at roughly the 1 sigma level with the standard model prediction (3.66±0.23)x10^-9. Further reducing the experimental error will be very interesting in view of observed anomalies in some other b-to-s-quark transitions. On the other hand, the room for new physics to show up is limited, as the theoretical error may soon become a showstopper.

The situation is a bit different for the B0→μ+μ- decay, where there is still relatively more room for new physics. This process has been less in the spotlight. This is partly due to a theoretical prejudice: in most popular new physics models it is impossible to generate a large effect in this decay without generating a corresponding excess in B0s→μ+μ-. Moreover, B0→μ+μ- is experimentally more difficult: the branching fraction predicted by the standard model is (1.06±0.09)x10^-10, which is roughly 35 times smaller than that for B0s→μ+μ-. In fact, 3σ evidence for the B0→μ+μ- decay appears only after combining LHCb and CMS data. More interestingly, the measured branching fraction, (3.9±1.4)x10^-10, is some 2 sigma above the standard model value. Of course, this is most likely a statistical fluke, but nevertheless it will be interesting to see an update once the 13-TeV LHC run collects enough data.
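The "1 sigma" and "2 sigma" statements above can be checked on the back of an envelope. The sketch below is a naive estimate only: it assumes symmetric Gaussian errors and adds the experimental and theoretical uncertainties in quadrature, which is my simplification and not the procedure of the combination paper. The function name `pull` is just an illustrative choice.

```python
import math

def pull(measured, sigma_measured, predicted, sigma_predicted):
    """Naive significance (in sigma) of measured minus predicted.

    Assumes symmetric Gaussian errors added in quadrature.
    """
    return (measured - predicted) / math.hypot(sigma_measured, sigma_predicted)

# Branching fractions as quoted in the text
bs_pull = pull(2.8e-9, 0.7e-9, 3.66e-9, 0.23e-9)      # B0s -> mu+ mu-
bd_pull = pull(3.9e-10, 1.4e-10, 1.06e-10, 0.09e-10)  # B0  -> mu+ mu-

# Ratio of the standard model predictions for the two decays
sm_ratio = 3.66e-9 / 1.06e-10

print(f"B0s -> mu mu pull: {bs_pull:+.2f} sigma")
print(f"B0  -> mu mu pull: {bd_pull:+.2f} sigma")
print(f"SM prediction ratio B0s/B0: {sm_ratio:.1f}")
```

With these inputs the B0s measurement sits a bit more than 1 sigma below the prediction and the B0 measurement about 2 sigma above it, while the two predictions differ by a factor of roughly 35.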

Sunday, 21 December 2014

Saturday, 13 December 2014

Planck: what's new

Slides from the recent Planck collaboration meeting are now available online. One can find there preliminary results that include an input from Planck's measurements of the polarization of the Cosmic Microwave Background (some of which were previously available via the legendary press release in French). I already wrote about the new important limits on dark matter annihilation cross section. Here I picked up a few more things that may be of interest for a garden variety particle physicist.

- ΛCDM.

Here is a summary of Planck's best fit parameters of the standard cosmological model with and without the polarization info:

Note that the temperature-only numbers are slightly different than in the 2013 release, because of improved calibration and foreground cleaning. Frustratingly, ΛCDM remains solid. The polarization data do not change the overall picture, but they shrink some errors considerably. The Hubble parameter remains at a low value; the previous tension with Ia supernovae observations seems to be partly resolved and blamed on systematics on the supernovae side. For the large scale structure fans, the parameter σ8 characterizing matter fluctuations today remains at a high value, in some tension with weak lensing and cluster counts.

- Neff.

There are also better limits on deviations from ΛCDM. One interesting result is the new improved constraint on the effective number of neutrinos, Neff in short. The way this result is presented may be confusing. We know perfectly well there are exactly 3 light active (interacting via weak force) neutrinos; this was established in the 90s at the LEP collider, and Planck has little to add in this respect. Heavy neutrinos, whether active or sterile, would not show in this measurement at all. For light sterile neutrinos, Neff implies an upper bound on the mixing angle with the active ones. The real importance of Neff lies in that it counts any light particles (other than photons) contributing to the energy density of the universe at the time of CMB decoupling. Outside the standard model neutrinos, other theorized particles could contribute any real positive number to Neff, depending on their temperature and spin. A few years ago there were consistent hints of Neff much larger than 3, which would imply physics beyond the standard model. Alas, Planck has shot down these claims. The latest number combining Planck and Baryon Acoustic Oscillations is Neff = 3.04±0.18, spot on the 3.046 expected from the standard model neutrinos. This represents an important constraint on any new physics model with very light (less than eV) particles.

- Σmν.

The limit on the sum of the neutrino masses keeps improving and gets into a really interesting regime. Recall that, from oscillation experiments, we can extract the neutrino mass differences: Δm32 ≈ 0.05 eV and Δm12 ≈ 0.009 eV up to a sign, but we don't know their absolute masses. Planck and others have already excluded the possibility that all 3 neutrinos have approximately the same mass. Now they are not far from probing the so-called inverted hierarchy, where two neutrinos have approximately the same mass and the 3rd is much lighter, in which case Σmν ≈ 0.1 eV. Planck and Baryon Acoustic Oscillations set the limit Σmν < 0.16 eV at 95% CL; however, this result is not strongly advertised because it is sensitive to the value of the Hubble parameter. Including non-Planck measurements leads to a weaker, more conservative limit Σmν < 0.23 eV, the same as quoted in the 2013 release.

- CνB.

For dessert, something cool. So far we could observe the cosmic neutrino background only through its contribution to the energy density of radiation in the early universe. This affects observables that can be inferred from the CMB acoustic peaks, such as the Hubble expansion rate or the time of matter-radiation equality. Planck, for the first time, probes the properties of the CνB. Namely, it measures the effective sound speed ceff and viscosity cvis parameters, which affect the growth of perturbations in the CνB. Free-streaming particles like the neutrinos should have ceff^2 = cvis^2 = 1/3, while Planck measures ceff^2 = 0.3256±0.0063 and cvis^2 = 0.336±0.039. The result is unsurprising, but it may help constrain some more exotic models of neutrino interactions.
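Going back to the Σmν item, the statement that the inverted hierarchy implies Σmν ≈ 0.1 eV can be reproduced with a few lines. This is a rough sketch under two assumptions of mine: the quoted 0.05 eV and 0.009 eV are read as square roots of the mass-squared splittings that oscillation experiments actually measure, and the lightest neutrino is taken to be massless, which gives the smallest possible sum in each ordering. The names `min_mass_sum`, `SQRT_DM2_LARGE` and `SQRT_DM2_SMALL` are illustrative.

```python
import math

# Splittings as quoted in the text (eV), read here as sqrt(|Delta m^2|)
SQRT_DM2_LARGE = 0.05   # "Delta m32"
SQRT_DM2_SMALL = 0.009  # "Delta m12"

def min_mass_sum(hierarchy):
    """Smallest sum of the three neutrino masses (eV), lightest state massless."""
    dm2_large = SQRT_DM2_LARGE ** 2
    dm2_small = SQRT_DM2_SMALL ** 2
    if hierarchy == "normal":
        # m1 = 0 < m2 << m3
        masses = (0.0, math.sqrt(dm2_small), math.sqrt(dm2_large))
    elif hierarchy == "inverted":
        # m3 = 0, with m1 and m2 nearly degenerate at the larger splitting
        masses = (math.sqrt(dm2_large), math.sqrt(dm2_large + dm2_small), 0.0)
    else:
        raise ValueError("hierarchy must be 'normal' or 'inverted'")
    return sum(masses)

for name in ("normal", "inverted"):
    print(f"{name:8s} hierarchy: minimal sum = {min_mass_sum(name):.3f} eV")
```

The minimal sums come out at about 0.06 eV (normal) and 0.10 eV (inverted), so the quoted limits of 0.16 eV and 0.23 eV are indeed closing in on the inverted case without reaching it yet.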

To summarize, Planck continues to deliver disappointing results, and there's still more to follow ;)

Monday, 1 December 2014

After-weekend plot: new Planck limits on dark matter

The Planck collaboration just released updated results that include an input from their CMB polarization measurements. The most interesting are the new constraints on the annihilation cross section of dark matter:

Dark matter annihilation in the early universe injects energy into the primordial plasma and increases the ionization fraction. Planck is looking for imprints of that in the CMB temperature and polarization spectrum. The relevant parameters are the dark matter mass and <σv>*feff, where <σv> is the thermally averaged annihilation cross section during the recombination epoch, and feff ~0.2 accounts for the absorption efficiency. The new limits are a factor of 5 better than the latest ones from the WMAP satellite, and a factor of 2.5 better than the previous combined constraints.

What does it mean for us? In vanilla models of thermal WIMP dark matter <σv> = 3*10^-26 cm^3/sec, in which case dark matter particles with masses below ~10 GeV are excluded by Planck. Actually, in this mass range the Planck limits are far less stringent than the ones obtained by the Fermi collaboration from gamma-ray observations of dwarf galaxies. However, the two are complementary to some extent. For example, Planck probes the annihilation cross section in the early universe, which can be different than today. Furthermore, the CMB constraints obviously do not depend on the distribution of dark matter in galaxies, which is a serious source of uncertainty for cosmic-ray experiments. Finally, the CMB limits extend to higher dark matter masses where gamma-ray satellites lose sensitivity. The last point implies that Planck can weigh in on the PAMELA/AMS cosmic-ray positron excess. In models where the dark matter annihilation cross section during the recombination epoch is the same as today, the mass and cross section range that can explain the excess is excluded by Planck. Thus, the new results make it even more difficult to interpret the positron anomaly as a signal of dark matter.
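To get a feeling for how such a limit moves with the dark matter mass, here is a back-of-envelope sketch. It rests on assumptions that are mine rather than taken from the plot: the energy injected per unit volume scales as feff*<σv>/m at fixed dark matter density, so the bound on <σv> grows linearly with the mass; feff is frozen at 0.2 for all masses; and the bound is normalized so that the thermal cross section is excluded exactly up to 10 GeV. The real limits depend on the annihilation channel and on a mass-dependent feff.

```python
F_EFF = 0.2               # absorption efficiency quoted in the text
SIGMA_V_THERMAL = 3e-26   # cm^3/s, vanilla thermal WIMP value from the text
M_EXCLUDED_BELOW = 10.0   # GeV, approximate exclusion quoted in the text

# Implied bound on the combination f_eff * <sigma v> / m (cm^3/s/GeV),
# fixed by requiring that a thermal WIMP is excluded below ~10 GeV.
P_ANN_LIMIT = F_EFF * SIGMA_V_THERMAL / M_EXCLUDED_BELOW

def sigma_v_limit(mass_gev, f_eff=F_EFF):
    """Rough upper limit on <sigma v> (cm^3/s), assuming linear scaling with mass."""
    return P_ANN_LIMIT * mass_gev / f_eff

for mass in (10.0, 100.0, 1000.0):
    print(f"m = {mass:6.0f} GeV: <sigma v> < {sigma_v_limit(mass):.1e} cm^3/s")
```

Under these assumptions the bound loosens by a factor of 10 for every factor of 10 in mass, which is why the thermal cross section is only probed at low masses while much larger cross sections are still constrained at high masses.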

Wednesday, 19 November 2014

Update on the bananas

One of the most interesting physics stories of this year was the discovery of an unidentified 3.5 keV x-ray emission line from galaxy clusters. This so-called bulbulon can be interpreted as a signal of a sterile neutrino dark matter particle decaying into an active neutrino and a photon. Some time ago I wrote about the banana paper that questioned the dark matter origin of the signal. Much has happened since, and I owe you an update. The current experimental situation is summarized in this plot:

To be more specific, here's what's happening.

- Several groups searching for the 3.5 keV emission have reported negative results. One of those searched for the signal in dwarf galaxies, which offer a much cleaner environment allowing for a more reliable detection. No signal was found, although the limits do not exclude conclusively the original bulbulon claim. Another study looked for the signal in multiple galaxies. Again, no signal was found, but this time the reported limits are in severe tension with the sterile neutrino interpretation of the bulbulon. Yet another study failed to find the 3.5 keV line in Coma, Virgo and Ophiuchus clusters, although they detect it in the Perseus cluster. Finally, the banana group analyzed the morphology of the 3.5 keV emission from the Galactic center and Perseus and found it incompatible with dark matter decay.

- The discussion about the existence of the 3.5 keV emission from the Andromeda galaxy is ongoing. The conclusions seem to depend on the strategy to determine the continuum x-ray emission. Using data from the XMM satellite, the banana group fits the background in the 3-4 keV range and does not find the line, whereas this paper argues it is more kosher to fit in the 2-8 keV range, in which case the line can be detected in exactly the same dataset. It is not obvious who is right, although the fact that the significance of the signal depends so strongly on the background fitting procedure is not encouraging.

- The main battle rages on around K-XVIII (X-n stands for the X atom stripped of n-1 electrons; thus, K-XVIII is the potassium ion with 2 electrons). This little bastard has emission lines at 3.47 keV and 3.51 keV which could account for the bulbulon signal. In the original paper, the bulbuline group invokes a model of plasma emission that allows them to constrain the flux due to the K-XVIII emission from the measured ratios of the strong S-XVI/S-XV and Ca-XX/Ca-XIX lines. The banana paper argued that the bulbuline model is unrealistic as it gives inconsistent predictions for some plasma line ratios. The bulbuline group pointed out that the banana group used wrong numbers to estimate the line emission strengths. The banana group maintains that their conclusions still hold when the error is corrected. It all boils down to the question whether the allowed range for the K-XVIII emission strength assumed by the bulbuline group is conservative enough. Explaining the 3.5 keV feature solely by K-XVIII requires assuming element abundance ratios that are very different than the solar one, which may or may not be realistic.

- On the other hand, both groups have converged on the subject of chlorine. In the banana paper it was pointed out that the 3.5 keV line may be due to the Cl-XVII (hydrogen-like chlorine ion) Lyman-β transition which happens to be at 3.51 keV. However, the bulbuline group subsequently derived limits on the corresponding Lyman-α line at 2.96 keV. From these limits, one can deduce in a fairly model-independent way that the contribution of the Cl-XVII Lyman-β transition is negligible.
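For readers not fluent in spectroscopic notation, the two atomic-physics statements in the list above can be sketched in code: how many electrons an ion like K-XVIII retains, and roughly where the Lyman lines of hydrogen-like chlorine fall. The energy estimate uses the plain Bohr formula E = Ry * Z^2 * (1 - 1/n^2), which is my simplification; it ignores relativistic and other corrections, so it lands slightly below the 2.96 keV and 3.51 keV quoted in the text. Helper names are illustrative.

```python
ATOMIC_NUMBER = {"S": 16, "Cl": 17, "K": 19, "Ca": 20}
ROMAN_VALUE = {"I": 1, "V": 5, "X": 10, "L": 50}
RYDBERG_EV = 13.606  # hydrogen ground-state binding energy in eV

def roman_to_int(numeral):
    """Convert a Roman numeral such as 'XVIII' or 'XIX' to an integer."""
    values = [ROMAN_VALUE[ch] for ch in numeral]
    total = 0
    for i, value in enumerate(values):
        # Subtractive notation: a smaller symbol before a larger one counts negative
        if i + 1 < len(values) and values[i + 1] > value:
            total -= value
        else:
            total += value
    return total

def electrons_left(ion):
    """Electrons remaining in an ion written as 'X-n' (atom X stripped of n-1 electrons)."""
    element, numeral = ion.split("-")
    return ATOMIC_NUMBER[element] - (roman_to_int(numeral) - 1)

def lyman_energy_kev(z, n_upper):
    """Bohr-model energy (keV) of the n_upper -> 1 transition in a hydrogen-like ion."""
    return RYDBERG_EV * z ** 2 * (1.0 - 1.0 / n_upper ** 2) / 1000.0

for ion in ("K-XVIII", "Cl-XVII", "S-XVI", "S-XV", "Ca-XX", "Ca-XIX"):
    print(f"{ion:8s}: {electrons_left(ion)} electron(s) left")

print(f"Cl-XVII Lyman-alpha: {lyman_energy_kev(17, 2):.2f} keV")
print(f"Cl-XVII Lyman-beta:  {lyman_energy_kev(17, 3):.2f} keV")
```

The line ratios used by the bulbuline group thus compare hydrogen-like ions (one electron: S-XVI, Ca-XX) with helium-like ones (two electrons: S-XV, Ca-XIX), and the Bohr estimate puts the chlorine Lyman-α and Lyman-β lines near 2.95 keV and 3.50 keV, within about half a percent of the quoted values.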

To clarify the situation we need more replies to comments on replies, and maybe also better data from future x-ray satellite missions. The significance of the detection depends, more than we'd wish, on dirty astrophysics involved in modeling the standard x-ray emission from galactic plasma. It seems unlikely that the sterile neutrino model with the originally reported parameters will stand, as it is in tension with several other analyses. The probability of the 3.5 keV signal being of dark matter origin is certainly much lower than a few months ago. But the jury is still out, and it's not impossible to imagine that more data and more analyses will tip the scales the other way.

Further reading: how to protect yourself from someone attacking you with a banana.

Saturday, 8 November 2014

Weekend Plot: Fermi and 7 dwarfs

This weekend the featured plot is borrowed from the presentation of Brandon Anderson at the symposium of the Fermi collaboration last week:

It shows the limits on the cross section of dark matter annihilation into b-quark pairs derived from gamma-ray observations of satellite galaxies of the Milky Way. These so-called dwarf galaxies are the most dark matter dominated objects known, which makes them a convenient place to search for dark matter. For example, WIMP dark matter annihilating into charged standard model particles would lead to an extended gamma-ray emission that could be spotted by the Fermi space telescope. Such emission coming from dwarf galaxies would be a smoking-gun signature of dark matter annihilation, given the relatively low level of dirty astrophysical backgrounds there (unlike in the center of our galaxy). Fermi has been looking for such signals, and a year ago they already published limits on the cross-section of dark matter annihilation into different final states. At the time, they also found a ~2 sigma excess that was intriguing, especially in conjunction with the observed gamma-ray excess from the center of our galaxy. Now Fermi is coming back with an updated analysis using more data and better calibration. The excess is largely gone and, for the bb final state, the new limits (blue) are 5 times stronger than the previous ones (black). For the theoretically favored WIMP annihilation cross section (horizontal dashed line), a dark matter particle annihilating into b-quarks is excluded if its mass is below ~100 GeV. The new limits are in tension with the dark matter interpretation of the galactic center excess (various colorful rings, depending on who you like). Of course, astrophysics is not an exact science, and by exploring numerous uncertainties one can soften the tension. What is more certain is that a smoking-gun signature of dark matter annihilation in dwarf galaxies is unlikely to be delivered in the foreseeable future.

Sunday, 19 October 2014

Weekend Plot: Bs mixing phase update

Today's featured plot was released last week by the LHCb collaboration:

It shows the CP violating phase in Bs meson mixing, denoted as φs, versus the difference of the decay widths between the two Bs meson eigenstates. The interest in φs comes from the fact that it's one of the precious observables that 1) is allowed by the symmetries of the Standard Model, 2) is severely suppressed due to the CKM structure of flavor violation in the Standard Model. Such observables are a great place to look for new physics (other observables in this family include Bs/Bd→μμ, K→πνν, ...). New particles, even too heavy to be produced directly at the LHC, could produce measurable contributions to φs as long as they don't respect the Standard Model flavor structure. For example, a new force carrier with a mass as large as 100-1000 TeV and order 1 flavor- and CP-violating coupling to b and s quarks would be visible given the current experimental precision. Similarly, loops of supersymmetric particles with 10 TeV masses could show up, again if the flavor structure in the superpartner sector is not aligned with that in the Standard Model.

The phase φs can be measured in certain decays of neutral Bs mesons where the process involves an interference of direct decays and decays through oscillation into the anti-Bs meson. Several years ago measurements at Tevatron's D0 and CDF experiments suggested a large new physics contribution. The mild excess has gone away since, like many other such hints. The latest value quoted by LHCb is φs = - 0.010 ± 0.040, which combines earlier measurements of the Bs → J/ψ π+ π- and Bs → Ds+ Ds- decays with the brand new measurement of the Bs → J/ψ K+ K- decay. The experimental precision is already comparable to the Standard Model prediction of φs = - 0.036. Further progress is still possible, as the Standard Model prediction can be computed to a few percent accuracy. But the room for new physics here is getting tighter and tighter.

Saturday, 4 October 2014

Weekend Plot: Stealth stops exposed

This weekend we admire the new ATLAS limits on stops - hypothetical supersymmetric partners of the top quark:

For a stop promptly decaying to a top quark and an invisible neutralino, the new search excludes the mass range between m_top and 191 GeV. These numbers do not seem impressive at first sight, but let me explain why it's interesting.

No sign of SUSY at the LHC could mean that she is dead, or that she is hiding. Indeed, the current experimental coverage has several blind spots where supersymmetric particles, in spite of being produced in large numbers, induce signals too subtle to be easily spotted in a detector. For example, based on the observed distribution of events with a top-antitop quark pair accompanied by large missing momentum, ATLAS and CMS put the lower limit on the stop mass at around 750 GeV. However, these searches are inefficient if the stop mass is close to that of the top quark, 175-200 GeV (more generally, for m_top+m_neutralino ≈ m_stop). In this so-called stealth stop region, the momentum carried away by the neutralino is too small to distinguish stop production from the standard model process of top quark production. We need another trick to smoke out light stops. The ATLAS collaboration followed a suggestion from theorists to use spin correlations. In the standard model, gluons couple either to 2 left-handed or to 2 right-handed quarks. This leads to a certain amount of correlation between the spins of the top and the antitop quark, which can be seen by looking at angular distributions of the decay products of the top quarks. If, on the other hand, a pair of top quarks originates from a decay of spin-0 stops, the spins of the pair are not correlated. ATLAS measured spin correlation in top pair production; in practice, they measured the distribution of the azimuthal angle between the two charged leptons in the events where both top quarks decay leptonically. As usual, they found it in good agreement with the standard model prediction. This allows them to deduce that there cannot be too many stops polluting the top quark sample, and place the limit of 20 picobarns on the stop production cross section at the LHC, see the black line on the plot. Given the theoretical uncertainties, that cross section corresponds to the stop mass somewhere between 191 GeV and 202 GeV.
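Why the missing momentum fades away in the stealth region follows from standard two-body decay kinematics: in the rest frame of the stop, the neutralino momentum is p = sqrt[(M^2 - (m1+m2)^2)(M^2 - (m1-m2)^2)] / (2M). The sketch below evaluates it for two illustrative benchmark points. The top mass of 173 GeV and the 1 GeV neutralino mass are my assumptions for the sake of the example, not numbers from the ATLAS analysis.

```python
import math

M_TOP = 173.0        # GeV, assumed top quark mass
M_NEUTRALINO = 1.0   # GeV, hypothetical light neutralino

def decay_momentum(m_parent, m_a, m_b):
    """Momentum (GeV) of either daughter in a two-body decay, parent rest frame."""
    if m_parent < m_a + m_b:
        raise ValueError("decay is kinematically forbidden")
    above_threshold = m_parent ** 2 - (m_a + m_b) ** 2
    above_difference = m_parent ** 2 - (m_a - m_b) ** 2
    return math.sqrt(above_threshold * above_difference) / (2.0 * m_parent)

for m_stop in (180.0, 750.0):
    p = decay_momentum(m_stop, M_TOP, M_NEUTRALINO)
    print(f"m_stop = {m_stop:5.0f} GeV: neutralino momentum = {p:6.1f} GeV")
```

For the 180 GeV stop the neutralino carries only about 7 GeV in the stop rest frame, compared to roughly 355 GeV for a 750 GeV stop, so the stealth events look almost exactly like ordinary top pair production.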

So, the stealth stop window is not completely closed yet, but we're getting there.

Wednesday, 24 September 2014

BICEP: what was wrong and what was right

As you already know, Planck finally came out of the closet. The Monday paper shows that the galactic dust polarization fraction in the BICEP window is larger than predicted by pre-Planck models, as previously suggested by an independent theorist's analysis. As a result, the dust contribution to the B-mode power spectrum at moderate multipoles is about 4 times larger than estimated by BICEP. This implies that the dust alone can account for the signal strength reported by BICEP in March this year, without invoking a primordial component from the early universe. See the plot, borrowed from Kyle Helson's twitter, with overlaid BICEP data points and Planck's dust estimates. For a detailed discussion of Planck's paper I recommend reading other blogs who know better than me, see e.g. here or here or here. Instead, I will focus on the sociological and ontological aspects of the affair. There's no question that BICEP screwed up big time. But can we identify precisely which steps led to the downfall, and which were a normal part of the scientific process? The story is complicated and there are many contradicting opinions, so to clarify it I will provide you with simple right or wrong answers :)

- BICEP Instrument: Right.

Whatever happened, one should not forget that, at the instrumental level, BICEP was a huge success. The sensitivity to B-mode polarization at angular scales above a degree beats previous CMB experiments by an order of magnitude. Other experiments are following in their tracks, and we should soon obtain better limits on the tensor-to-scalar ratio. (Though it seems BICEP already comes close to the ultimate sensitivity for a single-frequency ground-based experiment, given the dust pollution demonstrated by Planck).

- ArXiv first: Right.

Some complained that the BICEP results were announced before the paper was accepted in a journal. True, peer-review is the pillar of science, but it does not mean we have to adhere to obsolete 20th century standards. The BICEP paper has undergone a thorough peer-review process of the best possible kind that included the whole community. It is highly unlikely the error would have been caught by a random journal referee.

- Press conference: Right.

Many considered it inappropriate that the release of the results was turned into a publicity stunt with a press conference, champagne, and YouTube videos. My opinion is that, as long as they believed the signal was robust, they had every right to throw a party, much like CERN did on the occasion of the Higgs discovery. In the end it didn't really matter. Given the importance of the discovery and how news spread over the blogosphere, the net effect on the public would have been exactly the same if they had just submitted to ArXiv.

- Data scraping: Right.

There was a lot of indignation about the fact that, to estimate the dust polarization fraction in their field of view, BICEP used preliminary Planck data digitized from a slide in a conference presentation. I don't understand what's the problem. You should always use all publicly available relevant information; it's as simple as that.

- Inflation spin: Wrong.

BICEP sold the discovery as the smoking-gun evidence for cosmic inflation. This narrative was picked up by the mainstream press, often mixing inflation with the big bang scenario. In reality, the primordial B-mode would be yet another piece of evidence for inflation and a measurement of one crucial parameter - the energy density during inflation. This would be of course a huge thing, but apparently not big enough for PR departments. The damage is obvious: now that the result does not stand, the inflation picture and, by association, the whole big bang scenario is undermined in public perception. Now Guth and Linde cannot even dream of a Nobel prize, thanks to BICEP...

- Quality control: Wrong.

Sure, everyone makes mistakes. But, from what I heard, that unfortunate analysis of the dust polarization fraction based on the Planck polarization data was performed by a single collaboration member and never cross-checked. I understand there's been some bad luck involved: the wrong estimate fell very close to the predictions of faulty pre-Planck dust models. But, for dog's sake, the whole Nobel-prize-worth discovery was hinging on that. There's nothing wrong with being wrong, but not double- and triple-checking crucial elements of the analysis is criminal.

- Denial: Wrong.

The error in the estimate of the dust polarization fraction was understood soon after the initial announcement, and BICEP leaders were aware of it. Instead of biting the bullet, they chose a we-stand-by-our-results story. This resembled a child sweeping a broken vase under the sofa in the hope that no one would notice...

To conclude, BICEP goofed it up and deserves ridicule, in the same way a person slipping on a banana skin does. With some minimal precautions the mishap could have been avoided, or at least the damage could have been reduced. On the positive side, science worked once again, and we all learned something. Astrophysicists learned some exciting stuff about polarized dust in our galaxy. The public learned that science can get it wrong at times but is always self-correcting. And Andrei Linde learned to not open the door to a stranger with a backpack.

Friday, 19 September 2014

Dark matter or pulsars? AMS hints it's neither.

Yesterday AMS-02 updated their measurement of cosmic-ray positron and electron fluxes. The newly published data extend to positron energies of 500 GeV, compared to 350 GeV in the previous release. The central value of the positron fraction in the highest energy bin is one third of the error bar lower than the central value of the next-to-highest bin. This allows the collaboration to conclude that the positron fraction has a maximum and starts to decrease at high energies :] The sloppy presentation and unnecessary hype obscure the fact that AMS actually found something non-trivial. Namely, it is interesting that the positron fraction, after a sharp rise between 10 and 200 GeV, seems to plateau at higher energies at a value around 15%. This sort of behavior, although not expected by popular models of cosmic ray propagation, was actually predicted a few years ago, well before AMS was launched.
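To put the claimed "maximum" in perspective, here is a back-of-the-envelope check of how significant a drop of one third of an error bar between two adjacent bins is. This is only a sketch: it assumes that both bins have the same Gaussian error and are uncorrelated, neither of which is stated here, and the function name is made up for illustration.

```python
import math

def difference_significance(delta, sigma_a=1.0, sigma_b=1.0):
    """Significance, in standard deviations, of the difference `delta`
    between two independent Gaussian measurements with errors
    sigma_a and sigma_b (all in the same units)."""
    return delta / math.sqrt(sigma_a**2 + sigma_b**2)

# Drop of one third of an error bar, with equal errors in both bins
# (the equal, uncorrelated errors are an assumption of this sketch).
z = difference_significance(1.0 / 3.0)
print(f"significance of the drop: {z:.2f} sigma")
```

Under these assumptions the drop is worth roughly a quarter of a standard deviation, which is why the plateau, rather than the turnover, is the interesting feature.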

Before I get to the point, let's have a brief summary. In 2008 the PAMELA experiment observed a steep rise of the cosmic ray positron fraction between 10 and 100 GeV. Positrons are routinely produced by scattering of high energy cosmic rays (secondary production), but the rise was not predicted by models of cosmic ray propagation. This prompted speculations about another (primary) source of positrons: from pulsars, supernovae or other astrophysical objects, to dark matter annihilation. The dark matter explanation is unlikely for many reasons. On the theoretical side, the large annihilation cross section required is difficult to achieve, and it is difficult to produce a large flux of positrons without producing an excess of antiprotons at the same time. In particular, the MSSM neutralino entertained in the last AMS paper certainly cannot fit the cosmic-ray data for these reasons. When theoretical obstacles are overcome by skillful model building, constraints from gamma ray and radio observations disfavor the relevant parameter space. Even if these constraints are dismissed due to large astrophysical uncertainties, the models poorly fit the shape of the electron and positron spectrum observed by PAMELA, AMS, and Fermi (see the addendum of this paper for a recent discussion). Pulsars, on the other hand, are a plausible but handwaving explanation: we know they are all around and we know they produce electron-positron pairs in the magnetosphere, but we cannot calculate the spectrum from first principles.

But maybe primary positron sources are not needed at all? The old paper by Katz et al. proposes a different approach. Rather than starting with a particular propagation model, it assumes the high-energy positrons observed by PAMELA are secondary, and attempts to deduce from the data the parameters controlling the propagation of cosmic rays. The logic is based on two premises. Firstly, while production of cosmic rays in our galaxy contains many unknowns, the production of different particles is strongly correlated, with the relative ratios depending on nuclear cross sections that are measurable in laboratories. Secondly, different particles propagate in the magnetic field of the galaxy in the same way, depending only on their rigidity (momentum divided by charge). Thus, from an observed flux of one particle, one can predict the production rate of other particles. This approach is quite successful in predicting the cosmic antiproton flux based on the observed boron flux. For positrons, the story is more complicated because of large energy losses (cooling) due to synchrotron and inverse-Compton processes. However, in this case one can do the exercise of computing the positron flux assuming no losses at all. The result corresponds to a positron fraction of roughly 20% above 100 GeV. Since in the real world cooling can only suppress the positron flux, the value computed assuming no cooling represents an upper bound on the positron fraction.

Now, at lower energies, the observed positron flux is a factor of a few below the upper bound. This is already intriguing, as hypothetical primary positrons could in principle have an arbitrary flux, orders of magnitude larger or smaller than this upper bound. The rise observed by PAMELA can be interpreted as the suppression due to cooling decreasing as the positron energy increases. This is not implausible: the suppression depends on the interplay of the cooling time and the mean propagation time of positrons, both of which are unknown functions of energy. Once the cooling time exceeds the propagation time, the suppression factor is completely gone. In such a case the positron fraction should saturate the upper limit. This is what seems to be happening at the energies of 200-500 GeV probed by AMS, as can be seen in the plot. Already the previous AMS data were consistent with this picture, and the latest update only strengthens it.

So, it may be that the mystery of cosmic ray positrons has a simple down-to-galactic-disc explanation. If further observations show the positron flux climbing above the upper limit or dropping suddenly, then the secondary production hypothesis would be invalidated. But, for the moment, the AMS data seem to be consistent with no primary sources, just assuming that the cooling time of positrons is shorter than predicted by the state-of-the-art propagation models. So, instead of dark matter, AMS might have discovered that models of cosmic-ray propagation need a fix. That's less spectacular, but still worthwhile.

Thanks to Kfir for the plot and explanations.

Sunday, 7 September 2014

Weekend Plot: ultimate demise of diphoton Higgs excess

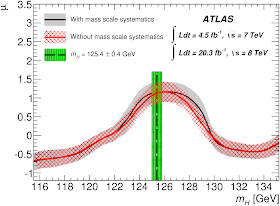

This weekend's plot is the latest ATLAS measurement of the Higgs signal strength μ in the diphoton channel:

Together with the CMS paper posted earlier this summer, this is probably the final word on Higgs-to-2-photons decays in the LHC run-I. These measurements have had an eventful history. The diphoton final state was one of the Higgs discovery channels back in 2012. Initially, both ATLAS and CMS were seeing a large excess of the Higgs signal strength compared to the standard model prediction. That was very exciting, as it was hinting at new charged particles with masses near 100 GeV. But in nature, sooner or later, everything has to converge to the standard model. ATLAS and CMS chose different strategies to get there. In CMS, the central value μ(t) displays an oscillatory behavior, alternating between excess and deficit. Each iteration brings it closer to the standard model limit μ = 1, with the latest reported value of μ = 1.14 ± 0.26. In ATLAS, on the other hand, μ(t) decreases monotonically, from μ = 1.8 ± 0.5 in summer 2012 down to μ = 1.17 ± 0.27 today (that precise value corresponds to a Higgs mass of 125.4 GeV, but from the plot one can see that the signal strength is similar anywhere in the 125-126 GeV range). At the end of the day, both strategies have led to almost identical answers :)
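For a rough feel of how far each quoted value sits from the standard model limit μ = 1, one can compute the pull, that is, the deviation in units of the quoted error. This is a minimal sketch that treats the errors as symmetric and Gaussian (an assumption; the experiments quote asymmetric errors in places), with the helper name `pull` made up for illustration.

```python
def pull(value, error, reference=1.0):
    """Deviation of `value` from `reference` in units of `error`."""
    return (value - reference) / error

# Signal strengths quoted in the text: (central value, error).
measurements = {
    "ATLAS, summer 2012": (1.8, 0.5),
    "ATLAS, latest": (1.17, 0.27),
    "CMS, latest": (1.14, 0.26),
}

pulls = {label: pull(mu, err) for label, (mu, err) in measurements.items()}
for label, p in pulls.items():
    print(f"{label}: {p:+.2f} sigma from mu = 1")
```

With these inputs the 2012 ATLAS value comes out at about 1.6 sigma above the standard model, while both latest values are within about 0.6 sigma.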

Tuesday, 12 August 2014

X-ray bananas

This year's discoveries follow the well-known 5-stage Kübler-Ross pattern: 1) announcement, 2) excitement, 3) debunking, 4) confusion, 5) depression. While BICEP is approaching the end of the cycle, the sterile neutrino dark matter signal reported earlier this year is now entering stage 3. This is thanks to yesterday's paper entitled Dark matter searches going bananas by Tesla Jeltema and Stefano Profumo (to my surprise, this is not the first banana in a physics paper's title).

In the previous episode, two independent analyses using public data from XMM and Chandra satellites concluded the presence of an anomalous 3.55 keV monochromatic emission from galactic clusters and Andromeda. One possible interpretation is a 7.1 keV sterile neutrino dark matter decaying to a photon and a standard neutrino. If the signal could be confirmed and conventional explanations (via known atomic emission lines) could be excluded, it would mean we are close to solving the dark matter puzzle.
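The factor of two between the line energy and the sterile neutrino mass follows from two-body kinematics. As a short worked step (the symbols $m_s$ for the sterile neutrino mass and $m_\nu$ for the standard neutrino mass are my notation), for a decay at rest into a photon and a much lighter neutrino:

$$
E_\gamma = \frac{m_s^2 - m_\nu^2}{2\, m_s} \approx \frac{m_s}{2} = \frac{7.1\ \mathrm{keV}}{2} = 3.55\ \mathrm{keV}.
$$

Since the dark matter particles in a galaxy or cluster are non-relativistic, the photons come out nearly monochromatic, which is why the signal would appear as a narrow line.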

It seems this is not gonna happen. The new paper makes two claims:

- Limits from x-ray observations of the Milky Way center exclude the sterile neutrino interpretation of the reported signal from galactic clusters.

- In any case, there's no significant anomalous emission line from galactic clusters near 3.55 keV.

Let's begin with the first claim. The authors analyze several days of XMM observations of the Milky Way center. They find that the observed spectrum can be very well fit by known plasma emission lines. In particular, all spectral features near 3.5 keV are accounted for if Potassium XVIII lines at 3.48 and 3.52 keV are included in the fit. Based on that agreement, they can derive strong bounds on the parameters of the sterile neutrino dark matter model: the mixing angle between the sterile and the standard neutrino should satisfy sin^2(2θ) ≤ 2*10^-11. This excludes the parameter space favored by the previous detection of the 3.55 keV line in galactic clusters. The conclusions are similar to, and even somewhat stronger than, those of the earlier analysis using Chandra data.

This is disappointing but not a disaster yet, as there are alternative dark matter models (e.g. axions converting to photons in the magnetic field of a galaxy) that do not predict observable emission lines from our galaxy. But there's one important corollary of the new analysis. It seems that the inferred strength of the Potassium XVIII lines compared to the strength of other atomic lines does not agree well with theoretical models of plasma emission. Such models were an important ingredient in the previous analyses that found the signal. In particular, the original 3.55 keV detection paper assumed upper limits on the strength of the Potassium XVIII line derived from the observed strength of the Sulfur XVI line. But the new findings suggest that systematic errors may have been underestimated. Allowing for a higher flux of Potassium XVIII, and also including the 3.51 keV Chlorine XVII line (that was missed in the previous analyses), one can obtain a good fit to the observed x-ray spectrum from galactic clusters, without introducing a dark matter emission line. Right... we suspected something was smelling bad here, and now we know it was chlorine... Finally, the new paper reanalyses the x-ray spectrum from Andromeda, but it disagrees with the previous findings: there's a hint of the 3.53 keV anomalous emission line from Andromeda, but its significance is merely 1 sigma.

So, the putative dark matter signals are dropping like flies these days. We urgently need new ones to replenish my graph ;)

Note added: While finalizing this post I became aware of today's paper that, using the same data, DOES find a 3.55 keV line from the Milky Way center. So we're already at stage 4... it seems that the devil is in the details of how you model the potassium lines (which, frankly speaking, is not reassuring).

Wednesday, 23 July 2014

Higgs Recap

On the occasion of summer conferences the LHC experiments dumped a large number of new Higgs results. Most of them have already been advertised on blogs, see e.g. here or here or here. In case you missed anything, here I summarize the most interesting updates of the last few weeks.

1. Mass measurements.

Both ATLAS and CMS recently presented improved measurements of the Higgs boson mass in the diphoton and 4-lepton final states. The errors shrink to 400 MeV in ATLAS and 300 MeV in CMS. The news is that Higgs has lost some weight (the boson, not Peter). A naive combination of the ATLAS and CMS results yields the central value 125.15 GeV. The profound consequence is that, for another year at least, we will call it the 125 GeV particle, rather than the 125.5 GeV particle as before ;)

While the central values of the Higgs mass combinations quoted by ATLAS and CMS are very close, 125.36 vs 125.03 GeV, the individual inputs are still a bit apart from each other. Although the consistency of the ATLAS measurements in the diphoton and 4-lepton channels has improved, these two independent mass determinations differ by 1.5 GeV, which corresponds to a 2 sigma tension. Furthermore, the central values of the Higgs mass quoted by ATLAS and CMS differ by 1.3 GeV in the diphoton channel and by 1.1 GeV in the 4-lepton channel, which also amount to 2 sigmish discrepancies. This could be just bad luck, or maybe the systematic errors are slightly larger than the experimentalists think.
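The naive combination quoted above can be reproduced with an inverse-variance weighted average of the two central values. This is a sketch under assumptions: it treats the rounded errors from the text (400 MeV for ATLAS, 300 MeV for CMS) as Gaussian and uncorrelated, and the function name `combine` is made up for illustration; it is not the procedure an official combination would use.

```python
import math

def combine(measurements):
    """Inverse-variance weighted mean of (value, error) pairs,
    assuming independent Gaussian errors.
    Returns (combined value, combined error)."""
    weights = [1.0 / err**2 for _, err in measurements]
    total = sum(weights)
    mean = sum(w * val for w, (val, _) in zip(weights, measurements)) / total
    return mean, 1.0 / math.sqrt(total)

atlas = (125.36, 0.4)  # GeV, central value and rounded error from the text
cms = (125.03, 0.3)    # GeV, central value and rounded error from the text

mass, error = combine([atlas, cms])
print(f"naive combination: {mass:.2f} +/- {error:.2f} GeV")
```

With these inputs the weighted mean lands on 125.15 GeV, with a combined error of about 0.24 GeV. The CMS value pulls harder because its smaller error gives it almost twice the weight.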

2. Diphoton rate update.

CMS finally released a new value of the Higgs signal strength in the diphoton channel. This CMS measurement was a bit of a roller-coaster: initially they measured an excess, then with the full dataset they reported a small deficit. After more work and more calibration they settled on the value 1.14 (+0.26, -0.23) relative to the standard model prediction, in perfect agreement with the standard model. Meanwhile ATLAS is also revising the signal strength in this channel towards the standard model value. The number 1.29±0.30 quoted on the occasion of the mass measurement is not yet the final one; there will soon be a dedicated signal strength measurement with, most likely, a slightly smaller error. Nevertheless, we can safely announce that the celebrated Higgs diphoton excess is no more.

3. Off-shell Higgs.

Most of the LHC searches are concerned with an on-shell Higgs, that is when its 4-momentum squared is very close to its mass squared. This is where the Higgs is most easily recognizable, since it can show up as a bump in invariant mass distributions. However the Higgs, like any quantum particle, can also appear as a virtual particle off-mass-shell and influence, in a subtler way, the cross section or differential distributions of various processes. One place where an off-shell Higgs may visibly contribute is the pair production of on-shell Z bosons. In this case, the interference between the gluon-gluon → Higgs → Z Z process and the non-Higgs one-loop Standard Model contribution to the gluon-gluon → Z Z process can influence the cross section in a non-negligible way. At the beginning, these off-shell measurements were advertised as a model-independent Higgs width measurement, although now it is recognized that the "model-independent" claim does not stand. Nevertheless, measuring the ratio of the off-shell and on-shell Higgs production provides qualitatively new information about the Higgs couplings and, under some specific assumptions, can be interpreted as an indirect constraint on the Higgs width. Now both ATLAS and CMS quote constraints on the Higgs width at the level of 5 times the Standard Model value. Currently, these results are not very useful in practice. Indeed, it would require a tremendous conspiracy to reconcile the current data with a Higgs width larger than 1.3 times the standard model one. But a new front has been opened, and one hopes for much more interesting results in the future.
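The logic behind reading the off-shell/on-shell ratio as a width constraint can be sketched schematically. The scaling below is the standard narrow-width argument rather than anything taken from the ATLAS or CMS analyses; the symbols $g_{ggH}$, $g_{HZZ}$ and $\Gamma_H$ are my notation, and the interference with the non-Higgs contribution is ignored for simplicity:

$$
\sigma_{\text{on-shell}} \propto \frac{g_{ggH}^2\, g_{HZZ}^2}{\Gamma_H},
\qquad
\sigma_{\text{off-shell}} \propto g_{ggH}^2\, g_{HZZ}^2
\quad\Longrightarrow\quad
\frac{\sigma_{\text{off-shell}}}{\sigma_{\text{on-shell}}} \propto \Gamma_H .
$$

The on-shell rate alone cannot fix the width, because rescaling the couplings and the width together leaves it unchanged; the off-shell rate breaks that degeneracy. The "specific assumptions" enter here: the ratio translates into a width only if the couplings that appear off-shell are the same as those measured on-shell, which new physics in the loops could spoil.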

4. Tensor structure of Higgs couplings.

Another front that is being opened as we speak is constraining higher order Higgs couplings with a different tensor structure. So far, we have been given the so-called spin/parity measurements. That is to say, the LHC experiments imagine a 125 GeV particle with a different spin and/or parity than the Higgs, and couplings to matter consistent with that hypothesis. Then they test whether this new particle or the standard model Higgs better describes the observed differential distributions of Higgs decay products. This has some appeal to the general public and Nobel committees but little practical meaning. That's because the current data, especially the Higgs signal strength measured in multiple channels, clearly show that the Higgs is, in the first approximation, the standard model one. New physics, if it exists, may only be a small perturbation on top of the standard model couplings. The relevant question is how well we can constrain these perturbations. For example, possible couplings of the Higgs to the Z boson are

In the standard model only the first type of coupling is present in the Lagrangian, and all the a coefficients are zero. New heavy particles coupled to the Higgs and Z bosons could be indirectly detected by measuring non-zero a's. In particular, a3 violates the parity symmetry and could arise from mixing of the standard model Higgs with a pseudoscalar particle. The presence of non-zero a's would show up, for example, as a modification of the lepton momentum distributions in the Higgs decay to 4 leptons. This was studied by CMS in this note. What they do is not perfect yet, and the results are presented in an unnecessarily complicated fashion. In any case it's a step in the right direction: as the analysis improves and more statistics are accumulated in the next runs, these measurements will become an important probe of new physics.

5. Flavor violating decays.

In the standard model, the Higgs couplings conserve flavor, in both the quark and the lepton sectors. This is a consequence of the assumption that the theory is renormalizable and that only 1 Higgs field is present. If either of these assumptions is violated, the Higgs boson may mediate transitions between different generations of matter. Earlier, ATLAS and CMS searched for top quark decay to charm and Higgs. More recently, CMS turned to lepton flavor violation, searching for Higgs decays to τμ pairs. This decay cannot occur in the standard model, so the search is a clean null test. At the same time, the final state is relatively simple from the experimental point of view, thus this decay may be a sensitive probe of new physics. Amusingly, CMS sees an excess with a significance of 2.5 sigma, corresponding to an h→τμ branching fraction of order 1%. So we can entertain the possibility that the Higgs holds the key to new physics and flavor hierarchies, at least until ATLAS comes out with its own measurement.

1. Mass measurements.

Both ATLAS and CMS recently presented improved measurements of the Higgs boson mass in the diphoton and 4-lepton final states. The errors shrink to 400 MeV in ATLAS and 300 MeV in CMS. The news is that Higgs has lost some weight (the boson, not Peter). A naive combination of the ATLAS and CMS results yields the central value 125.15 GeV. The profound consequence is that, for another year at least, we will call it the 125 GeV particle, rather than the 125.5 GeV particle as before ;)

While the central values of the Higgs mass combinations quoted by ATLAS and CMS are very close, 125.36 vs 125.03 GeV, the individual inputs are still a bit apart from each other. Although the consistency of the ATLAS measurements in the diphoton and 4-lepton channels has improved, these two independent mass determinations differ by 1.5 GeV, which corresponds to a 2 sigma tension. Furthermore, the central values of the Higgs mass quoted by ATLAS and CMS differ by 1.3 GeV in the diphoton channel and by 1.1 in the 4-lepton channel, which also amount to 2 sigmish discrepancies. This could be just bad luck, or maybe the systematic errors are slightly larger than the experimentalists think.

2. Diphoton rate update.

CMS finally released a new value of the Higgs signal strength in the diphoton channel. This CMS measurement was a bit of a roller-coaster: initially they measured an excess, then with the full dataset they reported a small deficit. After more work and more calibration they settled to the value 1.14+0.26-0.23 relative to the standard model prediction, in perfect agreement with the standard model. Meanwhile ATLAS is also revising the signal strength in this channel towards the standard model value. The number 1.29±0.30 quoted on the occasion of the mass measurement is not yet the final one; there will soon be a dedicated signal strength measurement with, most likely, a slightly smaller error. Nevertheless, we can safely announce that the celebrated Higgs diphoton excess is no more.

3. Off-shell Higgs.

Most of the LHC searches are concerned with an on-shell Higgs, that is when its 4-momentum squared is very close to its mass squared. This is where Higgs is most easily recognizable, since it can show up as a bump in invariant mass distributions. However Higgs, like any quantum particle, can also appear as a virtual particle off-mass-shell and influence, in a subtler way, the cross section or differential distributions of various processes. One place where an off-shell Higgs may visibly contribute is the pair production of on-shell Z bosons. In this case, the interference between the gluon-gluon → Higgs → Z Z process and the non-Higgs one-loop Standard Model contribution to the gluon-gluon → Z Z process can influence the cross section in a non-negligible way. At the beginning, these off-shell measurements were advertised as a model-independent Higgs width measurement, although now it is recognized that the "model-independent" claim does not stand. Nevertheless, measuring the ratio of the off-shell and on-shell Higgs production provides qualitatively new information about the Higgs couplings and, under some specific assumptions, can be interpreted as an indirect constraint on the Higgs width. Now both ATLAS and CMS quote the constraints on the Higgs width at the level of 5 times the Standard Model value. Currently, these results are not very useful in practice. Indeed, it would require a tremendous conspiracy to reconcile the current data with a Higgs width larger than 1.3 times the standard model one. But a new front has been opened, and one hopes for much more interesting results in the future.
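
The logic behind the width interpretation can be sketched schematically. The scaling below is only illustrative: it ignores the interference term, and the symbols g_ggh and g_hZZ (the Higgs couplings to gluons and to Z bosons) and μ (a rate relative to the standard model one) are notation introduced here. Near the resonance the rate is inversely proportional to the width, while far above it the Higgs propagator no longer depends on the width:

```latex
% Schematic scaling only; interference with the non-Higgs gg -> ZZ amplitude is ignored.
\begin{align}
\sigma_{\text{on-shell}}(gg \to h \to ZZ) &\propto \frac{g_{ggh}^2\, g_{hZZ}^2}{\Gamma_h}, \\
\sigma_{\text{off-shell}}(gg \to h^* \to ZZ) &\propto g_{ggh}^2\, g_{hZZ}^2, \\
\frac{\mu_{\text{off-shell}}}{\mu_{\text{on-shell}}} &= \frac{\Gamma_h}{\Gamma_h^{\text{SM}}}
\quad \text{(if the couplings are the same on-shell and off-shell)}.
\end{align}
```

The assumption in the last line is exactly where the "model-independent" label fails: new physics can make the effective couplings depend on the energy scale, so the on-shell and off-shell couplings need not be equal.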

4. Tensor structure of Higgs couplings.

Another front that is being opened as we speak is constraining higher order Higgs couplings with a different tensor structure. So far, we have been given the so-called spin/parity measurements. That is to say, the LHC experiments imagine a 125 GeV particle with a different spin and/or parity than the Higgs, and the couplings to matter consistent with that hypothesis. Then they test whether this new particle or the standard model Higgs better describes the observed differential distributions of Higgs decay products. This has some appeal to the general public and Nobel committees but little practical meaning. That's because the current data, especially the Higgs signal strength measured in multiple channels, clearly show that the Higgs is, in the first approximation, the standard model one. New physics, if it exists, may only be a small perturbation on top of the standard model couplings. The relevant question is how well we can constrain these perturbations. For example, possible couplings of the Higgs to the Z boson are

In the standard model only the first type of coupling is present in the Lagrangian, and all the a coefficients are zero. New heavy particles coupled to the Higgs and Z bosons could be indirectly detected by measuring non-zero a's. In particular, a3 violates the parity symmetry and could arise from mixing of the standard model Higgs with a pseudoscalar particle. The presence of non-zero a's would show up, for example, as a modification of the lepton momentum distributions in the Higgs decay to 4 leptons. This was studied by CMS in this note. What they do is not perfect yet, and the results are presented in an unnecessarily complicated fashion. In any case it's a step in the right direction: as the analysis improves and more statistics are accumulated in the next runs these measurements will become an important probe of new physics.

5. Flavor violating decays.

In the standard model, the Higgs couplings conserve flavor, in both the quark and the lepton sectors. This is a consequence of the assumption that the theory is renormalizable and that only 1 Higgs field is present. If either of these assumptions is violated, the Higgs boson may mediate transitions between different generations of matter. Earlier, ATLAS and CMS searched for top quark decay to charm and Higgs. More recently, CMS turned to lepton flavor violation, searching for Higgs decays to τμ pairs. This decay cannot occur in the standard model, so the search is a clean null test. At the same time, the final state is relatively simple from the experimental point of view, thus this decay may be a sensitive probe of new physics. Amusingly, CMS sees a 2.5 sigma significant excess corresponding to the h→τμ branching fraction of order 1%. So we can entertain a possibility that Higgs holds the key to new physics and flavor hierarchies, at least until ATLAS comes out with its own measurement.

Saturday, 19 July 2014

Weekend Plot: Prodigal CRESST

CRESST is one of the dark matter direct detection experiments seeing an excess which may be interpreted as a signal of a fairly light (order 10 GeV) dark matter particle. Or it was... This week they posted a new paper reporting on new data collected last year with an upgraded detector. Farewell CRESST signal, welcome CRESST limits:

The new limits (red line) exclude most of the region of the parameter space favored by the previous CRESST excess: M1 and M2 in the plot. Of course, these regions have never been taken at face value because they are excluded by orders of magnitude by the LUX, Xenon, and CDMS experiments. Nevertheless, the excess was pointing to a similar dark matter mass as the signals reported by DAMA, CoGeNT, and CDMS-Si (other color stains), which prompted many to speculate about a common origin of all these anomalies. Now the excess is gone. Instead, CRESST emerges as an interesting player in the race toward the neutrino wall. Their target material - CaWO4 crystals - contains oxygen nuclei which, due to their small masses, are well suited for detecting light dark matter. The kink in the limits curve near 5 GeV is the point below which dark-matter-induced recoil events would be dominated by scattering on oxygen. Currently, CRESST has the world's best limits for dark matter masses between 2 and 3 GeV, beating DAMIC (not shown in the plot) and CDMSlite (dashed green line).
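
Why light nuclei help can be seen from simple kinematics. The sketch below uses the standard formula for the maximum recoil energy in elastic scattering, E_max = 2 μ² v² / m_N, where μ is the reduced mass of the dark matter particle and the nucleus. The dark matter speed of 2.5e-3 c (about 750 km/s) and the 3 GeV mass are illustrative assumptions, not numbers from the CRESST paper, and the nuclear masses are approximated by the mass number times the atomic mass unit.

```python
AMU_GEV = 0.9315  # atomic mass unit in GeV (approximate)


def max_recoil_kev(m_dm_gev, mass_number, v=2.5e-3):
    """Maximum nuclear recoil energy in keV for elastic scattering.

    m_dm_gev    -- dark matter mass in GeV
    mass_number -- mass number A of the target nucleus
    v           -- dark matter speed in units of c (assumed value)
    """
    m_n = mass_number * AMU_GEV
    mu = m_dm_gev * m_n / (m_dm_gev + m_n)  # reduced mass
    return 2.0 * mu**2 * v**2 / m_n * 1e6   # GeV -> keV


# The three elements in CaWO4, with approximate mass numbers.
targets = {"O": 16.0, "Ca": 40.1, "W": 183.8}
recoils = {name: max_recoil_kev(3.0, a) for name, a in targets.items()}

for name, energy in recoils.items():
    print(f"{name:>2}: max recoil {energy:.2f} keV")
```

For a dark matter particle much lighter than the nucleus the maximum recoil energy falls roughly as 1/m_N, so oxygen recoils are the ones most likely to pass the detector threshold.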

Sunday, 15 June 2014

Weekend Plot: BaBar vs Dark Force

BaBar was an experiment studying 10 GeV electron-positron collisions. The collider is long gone, but interesting results keep appearing from time to time. Obviously, this is not a place to discover new heavy particles. However, due to the large luminosity and clean experimental environment, BaBar is well equipped to look for light and very weakly coupled particles that can easily escape detection in bigger but dirtier machines like the LHC. Today's weekend plot is the new BaBar limits on dark photons:

The dark photon is a hypothetical spin-1 boson that couples to other particles with a strength proportional to their electric charges. Compared to the ordinary photon, the dark one is assumed to have a non-zero mass mA' and a coupling strength suppressed by the factor ε. If ε is small enough the dark photon can escape detection even if mA' is very small, in the MeV or GeV range. The model was conceived long ago, but in the previous decade it has gained wider popularity as the leading explanation of the PAMELA anomaly. Now, as PAMELA is getting older, she is no longer considered convincing evidence of new physics. But the dark photon model remains an important benchmark - a sort of spherical cow model for light hidden sectors. Indeed, in the simplest realization, the model is fully described by just two parameters: mA' and ε, which makes it easy to present and compare results of different searches.

In electron-positron collisions one can produce a dark photon in association with an ordinary photon, in analogy to the familiar process of e+e- annihilation into 2 photons. The dark photon then decays to a pair of electrons or muons (or heavier charged particles, if they are kinematically available). Thus, the signature is a spike in the e+e- or μ+μ- invariant mass spectrum of γl+l- events. BaBar performed this search to obtain the world's best limits on dark photons in the mass range 30 MeV - 10 GeV, with the upper limit on ε in the 0.001 ballpark. This does not have direct consequences for the explanation of the PAMELA anomaly, as the model works with a smaller ε too. On the other hand, the new results close in on the parameter space where the minimal dark photon model can explain the muon magnetic moment anomaly (although one should be aware that one can reduce the tension with a trivial modification of the model, by allowing the dark photon to decay into the hidden sector).
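
The "spike" is simply the statement that the two leptons from a dark photon decay always reconstruct to the same invariant mass. Here is a toy illustration, not the BaBar analysis: four-momenta are (E, px, py, pz) tuples in GeV with c = 1, and the 1 GeV dark photon decaying at rest is a hypothetical example.

```python
import math

M_MU = 0.10566  # muon mass in GeV


def invariant_mass(p1, p2):
    """Invariant mass of two particles given as (E, px, py, pz) in GeV."""
    e = p1[0] + p2[0]
    px, py, pz = (p1[i] + p2[i] for i in (1, 2, 3))
    m2 = e**2 - (px**2 + py**2 + pz**2)
    return math.sqrt(max(m2, 0.0))  # guard against tiny negative rounding


def two_body_decay_at_rest(m_parent, m_lepton=M_MU):
    """Back-to-back lepton four-momenta from a parent decaying at rest."""
    e = m_parent / 2.0
    p = math.sqrt(e**2 - m_lepton**2)
    return (e, 0.0, 0.0, p), (e, 0.0, 0.0, -p)


# Hypothetical 1 GeV dark photon decaying to a muon pair.
mu_plus, mu_minus = two_body_decay_at_rest(1.0)
m_ll = invariant_mass(mu_plus, mu_minus)
print(f"dimuon invariant mass: {m_ll:.3f} GeV")
```

Because the invariant mass does not depend on how the dark photon is moving, signal events pile up at mA' (smeared only by detector resolution), while the ordinary background is spread smoothly over the spectrum.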

So, no luck so far, we need to search further. What one should retain is that finding new heavy particles and finding new light weakly interacting particles seems equally probable at this point :)

Monday, 2 June 2014

Another one bites the dust...

...though it's not BICEP2 this time :) This is a long overdue update on the forward-backward asymmetry in top quark production.