Another year gone. In history books it will be marked as the year of the first LHC collisions, and in a few years no one will remember that 2.36$\ll$14. Meanwhile particle theorists have dwelled mostly on the dark side. The great expectations placed on the Fermi experiment have not been fulfilled so far: its results may contain interesting physics, but at the moment they are far from conclusive. The CDMS bubble inflated by certain irresponsible bloggers ;) burst last week with a loud smack. In short, we remain in darkness.

This is the last post this year. While you gobble I vanish into the jungle. Will be back next year, with more reckless rumors.

Wednesday, 23 December 2009

Thursday, 17 December 2009

CDMS Live

- 16:41. Welcome to the live commentary from the CDMS seminar starting today at 2pm Pacific time at SLAC. It might be the event of the year, or the flop of the decade. Though most likely it will be a hint of the century.

- 16:50 Our seminar room is starting to get packed. The webcast from SLAC will be projected on a big screen.

- 17:00. The SLAC seminar has started! The speaker is Jodi Cooley.

- 17:06. Dark matter history. Zwicky, Rubin, rotation curves, bullet clusters. No way around it, we have to suffer through that....

- 17:09. There is also a webcast of the Fermilab seminar here. They are ahead of SLAC...

- 17:12. Now talking at length about WIMPs. Does it mean they see a vanilla-flavor WIMP?

- 17:14. A picture of a cow on one of the slides.

- 17:15. Fermilab already got to the gamma rejection. We are watching the wrong webcast, booo.

- 17:18. Jodi starts describing the CDMS experiment.

- 17:20. Previous CDMS results at Fermilab. They're getting close.

- 17:30. We switched to the Fermilab talk here at Rutgers. Now the speaker is Lauren Hsu. She seems faster.

- 17:35. Very technical details about phonon timing and data quality monitoring.

- 17:38. Expected backgrounds. Finally some important details.

- 17:39. Estimated cosmogenic neutron background 0.04, and similarly for radiogenic ones.

- 17:40. Surface event background estimated at 0.6.

- 17:45. They are talking about expected limits. Scary. They don't have a signal? If there were no signal they would obtain bounds two times better than last time.

- 17:47. Rumors reaching me, of 2 events at 11 and 15 keV.

- 17:49. It's official: 2 events. One at 12 keV, the other at 15 keV.

- 17:49. There are 2 additional events very close to the cut window, at approximately 12 keV.

- 17:58. Now discussing the post-unblinding analysis and the statistical significance.

- 18:00. Both events were registered on weekends. Grad students having parties?

- 18:01. The significance of the signal is less than two sigma.

- 18:04. One of the events has something suspicious with the charge pulse. A long discussion unfolds.

- 18:12. After the post-unblinding analysis the signal significance drops to 1.5 sigma (a 23 percent probability of a background fluctuation).

- 18:14. The new limit on the dark matter cross section: $4\times10^{-44}$ cm$^2$ for a 70 GeV WIMP. Slightly better (by a factor of 1.5) than the last one.

- 18:17. Inelastic dark matter interpretation of the DAMA signal is not excluded by the new CDMS data.

- 18:18. Nearing the end. The speaker discusses super-CDMS, the possible future upgrade of the experiment.

- 18:20. Summarizing, no discovery. Just a hint of a signal but with a very low statistical significance. Was fun anyway.

- 18:20. So much for now. Good night and good luck. The first theory papers should appear on Monday.
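To get a feeling for what "probability of a background fluctuation" means in the entries above, here is a minimal sketch in Python. It assumes plain Poisson counting with no systematic uncertainties, which is certainly simpler than whatever the collaboration actually did. The function name `poisson_tail` is made up for this illustration, and the background is just the sum of the pre-unblinding estimates quoted at 17:39 and 17:40 (reading "similarly" as another 0.04). The revised post-unblinding background is not quoted in this commentary, so the sketch is not expected to reproduce the 23 percent figure.

```python
import math


def poisson_tail(n_obs, background):
    """Probability of seeing n_obs or more events from background alone."""
    # Sum the Poisson probabilities for 0 .. n_obs-1 events, then take the complement.
    below = sum(
        math.exp(-background) * background**k / math.factorial(k)
        for k in range(n_obs)
    )
    return 1.0 - below


# Pre-unblinding estimates from the talk (an assumption of this sketch):
# cosmogenic neutrons + radiogenic neutrons + surface events.
background = 0.04 + 0.04 + 0.6

p_two_or_more = poisson_tail(2, background)
print(f"P(>=2 events | b={background:.2f}) = {p_two_or_more:.3f}")
```

With these inputs the chance of two or more background events comes out at roughly 15 percent. A somewhat larger background estimate pushes that number up, which is the direction in which the post-unblinding analysis moved.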

Wednesday, 16 December 2009

A little update on CDMS

I guess I owe you a short summary to straighten out what I messed up. This Thursday CDMS is going to announce their new results on dark matter detection based on the 2008 and 2009 runs. The collaboration has scheduled two simultaneous talks, one at Fermilab and one at SLAC, for 5pm Eastern time (23:00 in Europe). The SLAC talk will be webcast here. An arXiv paper is also promised, and it will probably get posted Thursday evening. These facts are based on the news from the official CDMS page, so they may turn out to be facts after all, unlike the previous facts I called facts even though they were unfacts :-)

Having rendered unto Caesar, I can go on indulging in completely unfounded speculations. It is pretty clear that no discovery will be announced this week, in the formal scientific sense of the word "discovery". Earlier expectations of a discovery that I was reporting on were based on the rumors of a CDMS paper accepted in Nature, which turned out to be completely false.

Moreover, some CDMS members seem to play down the hopes. But the secrecy surrounding the announcement of the new results may suggest that CDMS has seen at least a hint of a signal: not enough for 3 sigma evidence, but enough to send us all into an excited state.

So I see two possible scenarios:

- Scenario #1

CDMS has detected 2-3 events with an expected background of order 0.5. All eyes will turn to XENON100 - a more sensitive direct detection experiment that is kicking off as we speak - which should provide the definitive answer by next summer. In the meantime, theorists will produce a zillion papers fitting their favorite recoil spectrum to the 3 events.

- Scenario #2

All this secrecy was just smoke and mirrors. CDMS has found 0 or 1 events, thus setting the best bounds so far on the dark matter-nucleon cross section. Given the expectations they raised in the physics community, the Thursday speakers will be torn to pieces by an angry mob, and their bones will be thrown to undergrads.

Wednesday, 9 December 2009

Go LHC!

Monday, 7 December 2009

What the hell is going on in CDMS???

The essence of blogging is of course spreading wild rumors. This one is definitely the wildest ever. The particle community is bustling with rumors of a possible discovery of dark matter in CDMS.

CDMS is an experiment located underground in the Soudan mine in Minnesota. It consists of two dozen germanium and silicon ice-hockey pucks cooled down to 40 mK. When a particle hits the detector it produces both phonons and ionization, and certain tell-tale features of these two signals allow the experimenters to sort out electron events (expected to be produced by mundane background processes) from nuclear recoils (expected to be produced by scattering of dark matter particles, as the apparatus is well shielded from ordinary nucleons). The last analysis, published in early 2008, was based on a data set collected in the years 2006--2007. After applying blind cuts they saw zero events that look like nuclear recoils, which allowed them to set the best limits so far on the scattering cross section of a garden-variety WIMP (for WIMPs lighter than 60 GeV the bounds from another experiment called XENON10 are slightly better).

By now CDMS must have acquired four times more data. The new data set was supposed to be unblinded some time last autumn, and the new improved limits should have been published by now. They were not.

And then...

- Fact #1: CDMS submitted a paper to Nature, and it was recently accepted. The paper is embargoed until December 18 (embargo is one of these relics of the last century that somehow persists until today, along with North Korea and Michael Jackson fans) - the collaboration is not allowed to speak publicly about its content. Consequently, CDMS has canceled all seminars scheduled before December 18.

- Fact #2: A film crew that was supposed to film the unblinding of the CDMS data was called off shortly before the scheduled date. They were told to come back in January, when the unblinding will be restaged.

- Theory #1: The common lore is that particle physics papers appearing in Nature (the magazine, not the bitch) are those claiming a discovery. It is not at all impossible that the new data set contains enough events for evidence or even a discovery. If the zero events in the previous CDMS paper were a downward fluke, several WIMP events could readily occur in the new data. Furthermore, in some fancy theories like inelastic dark matter, a large number of WIMP scattering events is conceivable because the new data were collected in summer when the wind is favorable.

- Theory #2: Data-starved particle theorists once again are freaking out for no reason. There is no discovery; CDMS will just publish their new, improved bounds on the scattering cross section of dark matter. CDMS is acting strangely because they want to draw attention: the experimental community is turning toward noble liquid technologies and funding of solid-state detectors like the one in CDMS is endangered.

Important update: I just received this in an email:

I was alerted to your blog of yesterday (you certainly don't make contacting you easy). Your "fact" #1, that Nature is about to publish a CDMS paper on dark matter, is completely false. This would be instantly obvious to the most casual observer because the purported date of publication is a Friday, and Nature is published on Thursdays. Your "fact" therefore contains as much truth as the average Fox News story, and I would be grateful if you would correct it immediately. Your comments about the embargo are therefore, within this context, ridiculous. Peer review is a process, the culmination of which is publication. We regard confidentiality of results during the process as a matter of professional ethics, though of course authors are free to post to arxiv at any point during the process (we will not interfere with professional communication of results to peers).

Dr Leslie Sage
Senior editor, physical sciences
Nature

It is still true that the new CDMS data are scheduled to be released on December 18th, and there will be presentations in a number of labs around the world. But if there's no Nature paper then theory #2 becomes far more likely.

Sunday, 6 December 2009

Back on track... what's next?

Unless you just came back from a trip to Jupiter's moons you know that the LHC is up and running again. This time, each commissioning step can be followed in real time on blogs, Facebook, or Twitter, which demonstrates that particle physics has made a huge leap since last year, at least in technological awareness. After the traumatic events of last fall (LHC meltdown, moving to New Jersey) I'm still a little cautious to wag my tail. But the LHC is back on track, no doubt about it, and one small step at a time we will reach high energy collisions early next year.

So what does this mean, in practice?

In the long run - everything.

In the short run - nothing.

The long run stands for 3-4 years. By that time, the LHC should acquire enough data for us to understand the mechanism of electroweak symmetry breaking. Most likely, Higgs will be chased down and its mass and some of its properties will be measured. If there is new physics at the TeV scale we will have some general idea of its shape, even if many details will still be unclear. The majority (all?) of currently fashionable theories will be flushed down the toilet, and the world will never be the same again.

In the short run, though, nothing much is bound to happen. In 2010 the LHC will run at 7 TeV center-of-mass energy (birds are singing that there won't be an energy upgrade next year), which makes its reach rather limited. Furthermore, the 100 inverse picobarns that the LHC is planning to produce next year is just one hundredth of what Tevatron will have acquired by the end of 2010. These two facts will make it very hard to beat the existing constraints from Tevatron, except in some special, very lucky circumstances. So the entire 2010 is going to be an engineering run; things will start to rock only in 2012, after the shutdown in 2011 brings the energy up to 10 TeV and increases the luminosity.

Unless.

Unless, by the end of 2010 Tevatron has a 2-3 sigma indication of a light Higgs boson. In that case, I imagine, CERN might consider the nuclear option. The energy is not that crucial for chasing a light Higgs - the production cross section at 7 TeV is only a factor of two smaller than at 10 TeV, and moreover the background at 7 TeV is also smaller. So CERN might decide to postpone the long shutdown and continue running at 7 TeV for as long as it takes to outrace Tevatron and claim the Higgs discovery. That scenario is not impossible in my opinion, and it would be very attractive for bloggers because it promises blood. But even that, in any case, is more than one year away.
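The cross-section argument above boils down to the fact that the expected number of signal events is the production cross section times the integrated luminosity. A minimal sketch of that bookkeeping in Python follows; the function name `relative_yield` is invented for the illustration, the only physics input is the factor of two quoted above, and backgrounds and detector efficiencies are ignored entirely.

```python
def relative_yield(xsec_ratio, lumi_ratio):
    """Signal events scale as N = cross section x integrated luminosity."""
    return xsec_ratio * lumi_ratio


# From the text: the light-Higgs cross section at 7 TeV is about half of that at 10 TeV.
xsec_7_over_10 = 0.5

# Integrated luminosity needed at 7 TeV, relative to 10 TeV, for the same signal yield.
lumi_needed = 1.0 / xsec_7_over_10

print(lumi_needed)
print(relative_yield(xsec_7_over_10, lumi_needed))
```

In other words, under these assumptions running at 7 TeV costs a factor of two in data, not in kind, which is why staying at the lower energy is at least conceivable.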

In the meantime, we have to look elsewhere for excitement. Maybe the old creaking Tevatron will draw the lucky number? Or maybe astrophysicists will find a smoking gun in the sky? Or, as I was dreaming early this year, the dark matter particle will be detected. Or maybe it already was? But that wild rumor deserves a separate post ;-)

Thursday, 19 November 2009

Fermi says "nothing"...like sure sure?

I wrote recently about a couple of theory groups who claim to have discovered intriguing signals in the gamma-ray data acquired by the Fermi satellite. The Fermi collaboration hastened to trash both these signals, visibly annoyed by pesky theorists meddling in their affairs. Therefore a status update is in order. Then I'll move to realizing the holy mission of yellow blogs, which is spreading wild rumors.

The first of the theorists' claims concerned the gamma-ray excess from the galactic center, allegedly consistent with a 30 GeV dark matter particle annihilating into b-quark pairs. The relevant data are displayed on this plot released recently by Fermi, which shows the gamma-ray spectrum in the seven-by-seven degree patch around the galactic center. There indeed seems to be an excess in the 2-4 GeV region. However, given the size of the error bars and of the systematic uncertainties, not to mention how badly we understand the astrophysical processes in the galactic center, one can safely say that there is nothing to be excited about for the moment.

The status of the Fermi haze is far less clear. Here is the story so far. In a recent paper, Doug Finkbeiner and collaborators looked into the Fermi gamma-ray data and found evidence for a population of very energetic electrons and positrons in the center of our galaxy. These electrons would emit gamma rays when colliding with starlight, in the process known as inverse Compton scattering. They would also emit microwave photons via synchrotron radiation, of which hints are present in the WMAP data. The high-energy electrons could plausibly be a sign of dark matter activity, and fit very well with the PAMELA positron excess, although one cannot exclude that they are produced by conventional astrophysical processes. But Fermi argues that there is no haze in their data. During the Fermi Symposium last week the collaboration was chanting anti-haze songs and tarred-and-feathered anyone humming Hazy Shade of Winter. Interestingly, it seems that each collaboration member has slightly different reasons for doubt. Some say the haze is just heavy cosmic-ray elements faking gamma-ray photons. Some say the haze does exist but it can be easily explained by tuned-up galactic models without invoking an energetic population of electrons. Some say the haze is LOOP-1 - a nearby supernova remnant that happens to lie roughly in the direction of the galactic center. But none of the above explanations seems to be on a firm footing, and the jury is definitely out. In the worst case, the matter should be clarified by the Planck satellite (already up in the sky), which is going to make more accurate maps of photon emission at lower frequencies that will lead to a better understanding of astrophysical backgrounds.

And now wild rumors... which, let's make it clear, are likely due to the daydreaming over-imagination of data-hungry theorists. The rumors concern Fermi's search for subhalos, which is one of the most promising methods of detecting dark matter in the sky. Subhalos are dwarf galaxies orbiting our Milky Way that are made almost entirely of dark matter. Two dozen subhalos have been discovered so far (by observing small clumps of stars that they host) but simulations predict several hundred of these objects. The darkest of the discovered subhalos has a mass-to-light ratio larger than a thousand, indicating a large concentration of dark matter. Because of that, one expects dark matter particles to efficiently annihilate and emit gamma rays (typically, via final state radiation or inverse Compton scattering of the annihilation products). Although the resulting gamma-ray flux is expected to be smaller than that from the galactic center, the subhalos with their small visible matter content offer a much cleaner environment to search for a signal.

So, Fermi is searching for spatially extended objects away from the galactic plane that steadily emit a lot of gamma rays but are not visible in other frequencies. The results based on 10 months of data have been presented in this poster. Apparently, they found no fewer than four candidates at the 5-sigma level!!! However, according to the poster, these candidates do not fit the spectra of three random dark matter models. For this reason, the conclusion of the search is that no subhalos have been detected, even though it is not clear what astrophysical processes could produce the signal they have found.

Well, I bet an average theorist would need fifteen minutes to write down a dark matter model fitting whatever spectrum Fermi has measured. On the other hand, the collaboration must have better reasons, not revealed to us mortals, to ditch the candidates they have found. On yet another hand, the fact that Fermi is not revealing the positions and the measured spectra of these four candidates makes the matter very very intriguing. So, we need to wait for more data. Or for a snitch :-)

Saturday, 7 November 2009

Higgs chased away from another hole

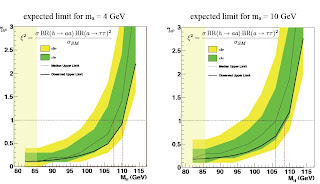

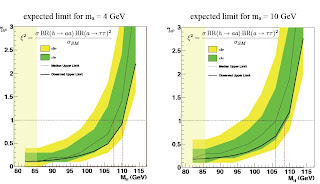

The hunt for the Higgs continues. Tevatron is running at full steam hoping to catch a glimpse of the sucker before the LHC joins in the game. If the standard model is correct, the entire range of allowed Higgs masses will be covered within the next 3-4 years. But there is one disturbing puzzle: indirect measurements indicate that we should have already found the Higgs! Indeed, precision measurements at LEP and Tevatron - mostly lepton asymmetries of Z decay and the value of the W boson mass - are best explained if the Higgs mass is some 80-90 GeV, whereas the direct limit from LEP implies that it must be heavier than 115 GeV.

There is one more reason, this time purely theoretical, to expect that the Higgs may be lighter than the naive LEP bound. If supersymmetry is relevant at the weak scale it is in general very uncomfortable with a heavy Higgs. Well, they keep telling you that the upper limit in the MSSM is 130 GeV. But that requires stretching the parameters of the model to the point of breaking, while the natural prediction is 90-100 GeV. Indeed, not finding the Higgs at LEP is probably the primary reason to disbelieve that supersymmetry is relevant at low energies.

Is it possible that the Higgs is lighter than 115 GeV and LEP missed it? The answer is yes, because the LEP searches have left many loopholes. The sensitivity of LEP analyses deteriorates if the Higgs decays into a many-body final state, which is possible in some extensions of the standard model. One popular theory where this could happen is the NMSSM - the 2.0 version of the MSSM with an additional singlet. Roughly, the Higgs could first decay into the new singlet, which in turn decays into two tau leptons, which amounts to the Higgs decaying into four tau leptons. This funny decay topology could escape LEP searches even if the Higgs is as light as 86 GeV! That is the case not because of deep physical reasons, but simply because LEP collaborations were too lazy to search for it (in comparison, the Higgs decaying into four b-quarks, which was studied by LEP, is excluded for Higgs masses up to 110 GeV).

But not anymore - this particular gaping hole has been recently sealed. A group of brave adventure-seekers ventured into CERN caverns, excavated the ancient LEP data and analyzed them looking for the Higgs-to-4tau signal. The results were presented this week at the ALEPH meeting celebrating the 20th anniversary of the experiment and the 9th anniversary of its demise. Of course, there is nothing there, in case you had any doubts. The new limit for the Higgs-to-4tau channel excludes Higgs masses below 105-110 GeV. Yet the beautiful thing in that analysis is that going back to the LEP data is still possible, if only there is reason, and will, and cheap work force.

So, is the idea of the hidden light Higgs dead? It has definitely received a serious blow, but it can still survive in some perverse models where the Higgs decays into four light jets, at least until someone ventures to kill that too. Anyway, never say dead; there are no experimental results that theorists could not find a way around ;-)

Friday, 30 October 2009

Hail to Freedom

Experimental collaborations display vastly different attitudes toward sharing their data. In my previous post I described an extreme approach bordering on schizophrenia. On the other end of the spectrum is the Fermi collaboration (hail to Fermi). After one year of taking and analyzing data they posted on a public website the energy and direction of every gamma-ray photon they had detected. This is of course the standard procedure for all missions funded by NASA (hail to NASA). Now everybody, from a farmer in the Guangxi province to a professor at Harvard, has a chance to search for dark matter using real data.

The release of the Fermi data has already spawned two independent analyses by theorists. One is being widely discussed on blogs (here and here) and in popular magazines, whereas the other paper went rather unnoticed. Both papers claim to have discovered an effect overlooked by the Fermi collaboration, and both hint at dark matter as the origin.

The first (chronologically, the second) of the two papers provides a new piece of evidence that the center of our galaxy hosts the so-called haze - a population of hard electrons (and/or positrons) whose spectrum is difficult to explain by conventional astrophysical processes. The haze was first observed by Jimi Hendrix ('Scuse me while I kiss the sky). Later, Doug Finkbeiner came across the haze when analyzing maps of cosmic microwave radiation provided by WMAP; in fact, that was also an independent analysis of publicly released data (hail to WMAP). The WMAP haze is supposedly produced by synchrotron radiation of the electrons. But the same electrons should also produce gamma rays when interacting with the interstellar light in the process known as inverse Compton scattering (Inverse Compton was the younger brother of Arthur), ICS for short. The claim is that Fermi has detected these ICS photons. You can even see it yourself if you stare long enough into the picture.

The second paper also takes a look at the gamma rays arriving from the galactic center, but uncovers a completely different signature. There seems to be a bumpy feature around a few GeV that does not fit a simple power-law spectrum expected from the background. The paper says that a dark matter particle of mass around 30 GeV annihilating into b quark pairs can fit the bump. The required annihilation cross section is fairly low, of order $10^{-25}\,\mathrm{cm^3/s}$, only a factor of 3 larger than that needed to explain the observed abundance of dark matter via a thermal relic. That would put this dark matter particle closer to a standard WIMP, as opposed to the recently popular dark matter particles designed to explain the PAMELA positron excess, which need a much larger mass and cross section.

Sadly, collider physics has a long way to go before reaching the same level of openness. Although collider experiments are 100% financed by public funds, and although acquired data have no commercial value, the data remain the property of the collaboration without ever being publicly released, not even after the collaboration has dissolved into nothingness. The only logical reason to explain that is inertia - quick and easy access to data and analysis tools has only quite recently become available to everybody. Another argument raised on that occasion is that only the collaboration that produced the data is able to understand and properly handle them. That is of course irrelevant. Surely, the collaboration can make any analysis ten times better and more reliably. However, some analyses are simply never done either due to lack of manpower or laziness, and others are marred by theoretical prejudices. The LEP experiment is a perfect example here. Several important searches have never been done because, at the time, there was no motivation from popular theories. In particular, it is not excluded that the Higgs boson exists with a mass accessible to LEP (that is less than 115 GeV), but it was missed because some possible decay channels have not been studied. It may well be that groundbreaking discoveries are stored on the LEP tapes rotting on dusty shelves in CERN catacombs. That danger could be easily avoided if the LEP data were publicly available in an accessible form.

In the end, what do we have to lose? In the worst case scenario, the unrestricted access to data will just lead to more entries in my blog ;-)

Update: At the FERMI Symposium this week in Washington the collaboration trashed both of the above dark matter claims.

Tuesday, 27 October 2009

What's really behind DAMA

More than once I wrote in this blog about crazy theoretical ideas to explain the DAMA modulation signal. There is a good excuse. In this decade, DAMA has been the main source of inspiration to extend dark matter model building beyond the simple WIMP paradigm; in particular, inelastic dark matter was conceived that way. This in turn has prompted a tightening of the net of experimental searches to include signals from non-WIMP dark matter particles. More importantly, blog readers always require sensation, scandal and blood (I know, I'm a reader myself), so that spectacular new physics explanations are always preferred. Nevertheless, prompted by a commenter, I thought it might be useful to balance a bit and describe a more trivial explanation of the DAMA signal that involves a systematic effect rather than dark matter particles.

Unlike most dark matter detection experiments, the DAMA instrument has no sophisticated background rejection (they only reject coincident hits in multiple crystals). That might be an asset, because they are a priori sensitive to a variety of dark matter particles, whether scattering elastically or inelastically, whether scattering on nucleons or electrons, and so on. But at the same time most of their hits come from mundane and poorly controlled sources such as natural radioactivity, which makes them vulnerable to unknown or underestimated backgrounds.

One important source of the background is a contamination of DAMA's sodium iodide crystals with radioactive elements like Uranium 238, Iodine 129 and Potassium 40. The last one is the main culprit because some of its decay products have the same energy as the putative DAMA signal. Potassium, being in the same Mendeleev column as sodium, can easily sneak into the lattice of the crystal. The radioactive isotope 40K is present with roughly 0.01 percent abundance in natural potassium. Ten percent of the time 40K decays to an excited state of Argon 40, which is followed by a de-excitation photon at 1.4 MeV and emission of Auger electrons with energy 3.2 keV. This process is known to occur in the DAMA detector with a sizable rate; in fact DAMA itself measured that background by looking for coincidences of MeV photons and 3 keV scintillation signals, see the plot above. That same background is also responsible for the little peak at 3 keV in the single hit spectrum measured by DAMA, see below (note that this is not the modulated spectrum on which the DAMA claim is based!). The peak here is exactly due to the Auger radiation.
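
To get a feeling for the numbers, here is a back-of-the-envelope sketch of how many 40K decays per day one might expect in a kilogram of crystal. The potassium contamination level is an assumed placeholder (20 ppb by mass), not a number taken from DAMA; the half-life and isotopic abundance are the standard tabulated values, and the ten percent branching fraction is the one quoted above.

```python
import math

AVOGADRO = 6.02214076e23           # atoms per mole
K_MOLAR_MASS_G = 39.098            # g/mol, natural potassium
K40_ABUNDANCE = 1.17e-4            # atom fraction of 40K (the "roughly 0.01 percent")
K40_HALF_LIFE_YEARS = 1.248e9      # half-life of 40K
SECONDS_PER_YEAR = 365.25 * 86400.0
EC_BRANCHING = 0.10                # fraction of decays going to excited Argon 40


def k40_decays_per_kg_day(potassium_mass_fraction: float) -> float:
    """40K decays per day in 1 kg of crystal with the given potassium mass fraction."""
    potassium_grams = potassium_mass_fraction * 1000.0
    k40_atoms = potassium_grams / K_MOLAR_MASS_G * AVOGADRO * K40_ABUNDANCE
    decay_constant = math.log(2.0) / (K40_HALF_LIFE_YEARS * SECONDS_PER_YEAR)  # per second
    return k40_atoms * decay_constant * 86400.0


def auger_events_per_kg_day(potassium_mass_fraction: float) -> float:
    """Decays per kg per day that produce the ~3 keV Auger signal."""
    return EC_BRANCHING * k40_decays_per_kg_day(potassium_mass_fraction)


if __name__ == "__main__":
    assumed_contamination = 20e-9  # ASSUMPTION: 20 ppb of natural potassium, for illustration
    print(f"all 40K decays:  {k40_decays_per_kg_day(assumed_contamination):.1f} per kg per day")
    print(f"3 keV producers: {auger_events_per_kg_day(assumed_contamination):.1f} per kg per day")
```

Under these assumptions the 3 keV events come out at a few per kilogram per day. Only the fraction where the 1.4 MeV photon escapes unnoticed ends up in the single hit spectrum, and the sketch makes no attempt to estimate that.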

Now, look at the spectrum of the time dependent component of the signal where DAMA claims to have found evidence for dark matter. The peak of the annual modulation signal occurs precisely at 3 keV. The fact that the putative signal is on top of the known background is VERY suspicious.

One should admit that it is not entirely clear what could cause the modulation of the background, although some subtle annual effect affecting the efficiency for detecting the Auger radiation is not implausible. So far, DAMA has not shown any convincing arguments that would exclude 40K as the origin of their modulation signal.

Actually, it is easy to check whether it's 40K or not. Just put one of the DAMA crystals inside an environment where the efficiency for detecting the decay products of 40K is nearly 100 percent. Like for example, in the Borexino balloon that is waiting next door in the Gran Sasso Laboratory. In fact, the Borexino collaboration has made this very proposal to DAMA. The answer was a resounding no.

There is another way Borexino could quickly refute or confirm the DAMA claim. Why not buy the sodium iodide crystals directly from Saint Gobain - the company that provided the crystals for DAMA? Not so fast. In the contract, DAMA has secured exclusive eternal rights for the use of sodium iodide crystals produced by Saint Gobain. At this point it comes as no surprise that DAMA threatens legal action if the company attempts to breach their "intellectual" property.

There are more stories that make the hair on your chest stand on end. One often hears the phrase "a very specific collaboration" when referring to DAMA, which is a roundabout way of saying "a bunch of assholes". Indeed DAMA has worked very hard to earn their bad reputation, and sometimes it's difficult to tell whether at the roots is only paranoia or also bad will. The problem, however, is that the history of physics has a few examples of technologically or intellectually less sophisticated experiments beating better competitors - take Penzias and Wilson for example.

So we will never know for sure whether the DAMA signal is real or not until it is definitely refuted or confirmed by another experiment. Fortunately, it seems that the people from Borexino have not given up yet. Recently I heard a talk by Cristiano Galbiati who said that the Princeton group is planning to grow their own sodium iodide crystals. That will take time, but an advantage is that they will be able to obtain better, more radio-pure crystals, and thus reduce the potassium 40 background by many orders of magnitude. So maybe in two years from now the dark matter will be cleared...

Monday, 5 October 2009

Early LHC Discoveries

It seems that the LHC restart will not be significantly delayed beyond this November. The moment when the first protons collide at 7 TeV energy will send particle theorists into an excited state. From day one, we will start harassing our CMS and ATLAS colleagues, begging for a hint of an excess in the data, or offering sex for a glimpse of invariant mass distributions. That will be the case in spite of the very small odds for seeing any new physics during the first months. Indeed, the results acquired so far by the Tevatron make it very unlikely that spectacular phenomena could show up in the early LHC. Although the LHC in the first year will operate at 3 times higher energy, the Tevatron will have the advantage of 100 times larger integrated luminosity, not to mention the better understanding of their detectors.
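
The luminosity handicap can be put into numbers with the usual counting formula, events = cross section times integrated luminosity. The sketch below is only an illustration: the 100 inverse picobarns for the early LHC is the figure used later in this post, the Tevatron luminosity is simply 100 times that, and the cross sections in the example are invented placeholders. Detector efficiencies and backgrounds are ignored altogether.

```python
def expected_events(cross_section_pb: float, luminosity_inv_pb: float) -> float:
    """Expected number of produced events: N = sigma * L."""
    return cross_section_pb * luminosity_inv_pb


def break_even_cross_section_ratio(lumi_tevatron_inv_pb: float, lumi_lhc_inv_pb: float) -> float:
    """How much larger the LHC cross section must be to match the Tevatron event count."""
    return lumi_tevatron_inv_pb / lumi_lhc_inv_pb


if __name__ == "__main__":
    lumi_lhc = 100.0          # inverse picobarns, early LHC
    lumi_tevatron = 10_000.0  # inverse picobarns, i.e. 100 times more

    ratio = break_even_cross_section_ratio(lumi_tevatron, lumi_lhc)
    print(f"LHC cross section must be {ratio:.0f}x larger just to break even")

    # HYPOTHETICAL cross sections for some new particle, for illustration only.
    sigma_tevatron_pb, sigma_lhc_pb = 0.01, 2.0
    print("Tevatron events:", expected_events(sigma_tevatron_pb, lumi_tevatron))
    print("LHC events:     ", expected_events(sigma_lhc_pb, lumi_lhc))
```

So the extra energy helps only for processes whose cross section grows by more than a factor of 100 when going from the Tevatron to the LHC, which is a tall order for most models.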

Nevertheless, it's fun to play the following game of imagination: what kind of new physics could show up in the early LHC without having been already discovered at the Tevatron? For that, two general conditions have to be satisfied:

The possible couplings of Z' to quarks and leptons can be theoretically constrained: imposing anomaly cancellation, flavor independence, and the absence of exotic fermions at the TeV scale implies that the new U(1) acts on the standard model fermions as a linear combination of the familiar hypercharge and the B-L global symmetry. Thus, one can describe the parameter space of these Z' models by just three parameters: two couplings $g_Y$ and $g_{B-L}$ and the Z' mass. This simple parametrization allows us to quickly scan through all possibilities. An example slice of the parameter space for the Z' mass 700 GeV is shown on the picture to the right. The region allowed by the Tevatron searches is painted blue, while the region allowed by electroweak precision tests is pink (the coupling of Z' to the electrons induces effective four-fermion operators that have been constrained by the LEP-II experiment). As you can see, these two constraints imply that both couplings have to be smallish, of order 0.2 at the most, which is even less than the hypercharge coupling g' in the standard model. That in turn implies that the production cross section at the LHC will be suppressed. Indeed, the region where the discovery at the LHC with 7 TeV and 100 inverse picobarns is impossible, marked as yellow, almost fully overlaps with the allowed parameter space. Only a tiny region (red arrow) is left for that particular mass, but even that pathetic scrap is likely to be wiped out once the Tevatron updates their Z' analyses.
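
The two-coupling parametrization is easy to play with. Below is a minimal sketch that computes the Z' coupling to each standard model fermion as $g_Y\,Y + g_{B-L}\,(B-L)$. The normalization is an assumption on my part (hypercharge in the convention Q = T3 + Y, with no extra factors), and so is the inclusion of a right-handed neutrino, which B-L needs for anomaly cancellation; the paper's conventions may differ.

```python
from fractions import Fraction as F

# (hypercharge Y, B-L) for one generation, in the convention Q = T3 + Y.
# ASSUMPTION: a right-handed neutrino nu_R is included alongside the usual fields.
SM_CHARGES = {
    "q_L":  (F(1, 6),  F(1, 3)),   # left-handed quark doublet
    "u_R":  (F(2, 3),  F(1, 3)),   # right-handed up quark
    "d_R":  (F(-1, 3), F(1, 3)),   # right-handed down quark
    "l_L":  (F(-1, 2), F(-1)),     # left-handed lepton doublet
    "e_R":  (F(-1),    F(-1)),     # right-handed electron
    "nu_R": (F(0),     F(-1)),     # right-handed neutrino
}


def zprime_couplings(g_y: float, g_bl: float) -> dict[str, float]:
    """Coupling of the Z' to each fermion: g_Y * Y + g_{B-L} * (B-L)."""
    return {
        name: g_y * float(y) + g_bl * float(b_minus_l)
        for name, (y, b_minus_l) in SM_CHARGES.items()
    }


if __name__ == "__main__":
    # Example point with both couplings "smallish"; the values are illustrative only.
    for name, coupling in zprime_couplings(g_y=0.2, g_bl=0.1).items():
        print(f"{name:5s} {coupling:+.3f}")
```

Setting $g_Y$ to zero gives a pure B-L boson with the same coupling to left- and right-handed fermions, while setting $g_{B-L}$ to zero gives a heavy copy of the hypercharge boson. Everything in between is a scan over two numbers, for each value of the Z' mass.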

example slice of the parameter space for the Z' mass 700 GeV is shown on the picture to the right. The region allowed by the Tevatron searches is painted blue, while the region allowed by electroweak precision tests is pink (the coupling of Z' to the electrons induces effective four-fermion operators that have been constrained by the LEP-II experiment). As you can see, these two constraints imply that both couplings have to be smallish, of order 0.2 at the most, which is even less than the hypercharge coupling g' in the standard model. That in turn implies that the production cross section at the LHC will be suppressed. Indeed, the region where the discovery at the LHC with 7 TeV and 100 inverse picobarns is impossible, marked as yellow, almost fully overlaps with the allowed parameter space. Only a tiny region (red arrow) is left for that particular mass, but even that pathetic scrap is likely to be wiped once the Tevatron updates their Z' analyses.

The above example illustrates how difficult is to cook up a model suitable for an early discovery at the LHC. A part of the reason why Z' is not a good candidate is that it is produced by quark-antiquark collisions. That is a frequent occurrence in the proton-antiproton collider like the Tevatron, whereas at the LHC, who is a proton-proton collider, one has to pay the PDF price of finding an antiquark in the proton. An interesting way out that goes under the name of diquark resonance was proposed in another recent paper. If the new resonance carries the quantum numbers of two quarks (rather than quark-antiquark pair) then the LHC would have a tremendous advantage over the Tevatron, as the resonance could be produced in quark-quark collisions that are more frequent at the LHC. Because of that, a large number of diquark events may be produced at the LHC in spite of the Tevatron constraints. The remaining piece of model building is to ensure that the diquark resonance decays to leptons often enough.

tremendous advantage over the Tevatron, as the resonance could be produced in quark-quark collisions that are more frequent at the LHC. Because of that, a large number of diquark events may be produced at the LHC in spite of the Tevatron constraints. The remaining piece of model building is to ensure that the diquark resonance decays to leptons often enough.

Diquarks are not present in the most popular extensions of the standard model and therefore they might appear to be artificial constructs. However, they can be found in somewhat more exotic models like for example the MSSM with a broken R-parity. That model allows for couplings like $u^c d^c \tilde b^c$, where $u^c,d^c$ are right-handed up and down quarks, while $\tilde b^c$ is the scalar partner of the right-handed bottom quark called the (right) sbottom. Obviously, this coupling violates R-symmetry because it contains only one superparticle (in the standard MSSM, supersymmetric particles couple always in pairs). The sbottom could then be produced by collisions of up and down quarks, both of which are easy to find in protons.

Decays of the sbottom are very model dependent: the parameter space of supersymmetric theories is as good as infinite and can accommodate numerous possibilities. Typically, the sbottom will undergo a complex cascade decay that may or may not involve leptons. For example, if the lightest supersymmetric particle is the scalar partner of the electron, then the sbottom can decay into a bottom quark + a neutralino who decays into an electron + a selectron who finally decays into an electron and 3 quarks:

$\tilde b^c -> b \chi^1 -> b e \tilde e -> b e e j j j$

As a result, the LHC would observe two hard electrons plus a number of jets in the final state, something that should not be missed.

To wrap up, the first year at the LHC will likely end up being an "engineering run", where the standard model will be "discovered" to the important end of calibrating the detectors. However, if the new physics is exotic enough, and the stars are lucky enough, then there might be some real excitement store.

Nevertheless, it's fun to play the following game of imagination: what kind of new physics could show up in the early LHC without having been already discovered at the Tevatron? For that, two general conditions have to be satisfied:

- There has to be a resonance coupled to the light quarks (so that it can be produced at the LHC with a large enough cross section) whose mass is just above the Tevatron reach, say 700-1000 GeV (so that the cross section at the Tevatron, but not at the LHC, is kinematically suppressed).

- The resonance has to decay to electrons or muons with a sizable branching fraction (so that the decay products can be seen in relatively clean and background-free channels).
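
The kinematic part of the first condition can be made concrete with a rough sketch. To produce a resonance of mass M on shell, the two colliding partons must carry momentum fractions with x1*x2 = M^2/s, and parton densities fall steeply at large x. The snippet below only computes those fractions. It assumes the Tevatron energy of 1.96 TeV (not quoted in the text) and a symmetric collision with x1 = x2, and the function names are made up for illustration.

```python
# Rough kinematics only: no parton densities are evaluated here.
# Assumption: Tevatron at sqrt(s) = 1.96 TeV; LHC at 7 TeV as in the text.
COLLIDERS_GEV = {"Tevatron": 1960.0, "LHC": 7000.0}


def parton_product(mass_gev, sqrt_s_gev):
    """tau = x1 * x2 = M^2 / s needed to make a resonance of mass M."""
    return (mass_gev / sqrt_s_gev) ** 2


def typical_x(mass_gev, sqrt_s_gev):
    """Momentum fraction for a symmetric collision, x1 = x2 = M / sqrt(s)."""
    return mass_gev / sqrt_s_gev


for mass in (700.0, 1000.0):
    for name, sqrt_s in COLLIDERS_GEV.items():
        x = typical_x(mass, sqrt_s)
        tau = parton_product(mass, sqrt_s)
        print(f"M = {mass:6.0f} GeV at {name:8s}: x ~ {x:.3f}, tau = {tau:.4f}")
```

For a 700 GeV resonance each parton needs about a third of the beam momentum at the Tevatron, but only a tenth at the LHC, which is where the parton densities are much larger.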

The possible couplings of a Z' (the gauge boson of a new U(1) symmetry) to quarks and leptons can be theoretically constrained: imposing anomaly cancellation, flavor independence, and the absence of exotic fermions at the TeV scale implies that the new U(1) acts on the standard model fermions as a linear combination of the familiar hypercharge and the B-L global symmetry. Thus, one can describe the parameter space of these Z' models by just three parameters: two couplings $g_Y$ and $g_{B-L}$ and the Z' mass. This simple parametrization allows us to quickly scan through all possibilities. An example slice of the parameter space for a Z' mass of 700 GeV is shown in the picture to the right. The region allowed by the Tevatron searches is painted blue, while the region allowed by electroweak precision tests is pink (the coupling of Z' to electrons induces effective four-fermion operators that have been constrained by the LEP-II experiment). As you can see, these two constraints imply that both couplings have to be smallish, of order 0.2 at the most, which is even less than the hypercharge coupling g' in the standard model. That in turn implies that the production cross section at the LHC will be suppressed. Indeed, the region where a discovery at the LHC with 7 TeV and 100 inverse picobarns is impossible, marked in yellow, almost fully overlaps with the allowed parameter space. Only a tiny region (red arrow) is left for that particular mass, but even that pathetic scrap is likely to be wiped out once the Tevatron updates its Z' analyses.
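
As a minimal sketch of this parametrization, the snippet below builds the Z' coupling to each standard model fermion as g_Y times its hypercharge plus g_B-L times its B-L charge. The normalization (hypercharge defined by Q = T3 + Y, one generation of left-handed Weyl fermions) and the function name are assumptions made for illustration, not taken from the paper under discussion, and the values 0.2 are just the upper end of the range quoted above.

```python
from fractions import Fraction as F

# (hypercharge Y, B-L) for one generation of left-handed Weyl fermions.
# Assumed convention: electric charge Q = T3 + Y.
SM_FERMIONS = {
    "Q":   (F(1, 6),  F(1, 3)),    # quark doublet
    "u^c": (F(-2, 3), F(-1, 3)),   # conjugate of the right-handed up quark
    "d^c": (F(1, 3),  F(-1, 3)),   # conjugate of the right-handed down quark
    "L":   (F(-1, 2), F(-1)),      # lepton doublet
    "e^c": (F(1),     F(1)),       # conjugate of the right-handed electron
}


def zprime_couplings(g_y, g_bl):
    """Coupling of the Z' to each fermion: g_Y * Y + g_{B-L} * (B-L)."""
    return {
        name: g_y * float(y) + g_bl * float(bl)
        for name, (y, bl) in SM_FERMIONS.items()
    }


# Illustrative point with both couplings at 0.2.
couplings = zprime_couplings(0.2, 0.2)
for name, value in couplings.items():
    print(f"{name:4s} {value:+.3f}")
```

Scanning the parameter space then amounts to looping over the two couplings and the Z' mass and evaluating whatever cross section formula one trusts at each point.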

The above example illustrates how difficult it is to cook up a model suitable for an early discovery at the LHC. Part of the reason why Z' is not a good candidate is that it is produced in quark-antiquark collisions. These are frequent at a proton-antiproton collider like the Tevatron, whereas at the LHC, which is a proton-proton collider, one has to pay the PDF price of finding an antiquark in the proton. An interesting way out that goes under the name of diquark resonance was proposed in another recent paper. If the new resonance carries the quantum numbers of two quarks (rather than of a quark-antiquark pair) then the LHC would have a tremendous advantage over the Tevatron, as the resonance could be produced in quark-quark collisions that are more frequent at the LHC. Because of that, a large number of diquark events may be produced at the LHC in spite of the Tevatron constraints. The remaining piece of model building is to ensure that the diquark resonance decays to leptons often enough.

Diquarks are not present in the most popular extensions of the standard model and therefore they might appear to be artificial constructs. However, they can be found in somewhat more exotic models, for example the MSSM with broken R-parity. That model allows for couplings like $u^c d^c \tilde b^c$, where $u^c,d^c$ are right-handed up and down quarks, while $\tilde b^c$ is the scalar partner of the right-handed bottom quark called the (right) sbottom. Obviously, this coupling violates R-parity because it contains only one superparticle (in the standard MSSM, supersymmetric particles always couple in pairs). The sbottom could then be produced in collisions of up and down quarks, both of which are easy to find in protons.

Decays of the sbottom are very model dependent: the parameter space of supersymmetric theories is as good as infinite and can accommodate numerous possibilities. Typically, the sbottom will undergo a complex cascade decay that may or may not involve leptons. For example, if the lightest supersymmetric particle is the scalar partner of the electron, then the sbottom can decay into a bottom quark plus a neutralino, which decays into an electron plus a selectron, which finally decays into an electron and 3 quarks:

$\tilde b^c \to b \chi^1 \to b e \tilde e \to b e e j j j$

As a result, the LHC would observe two hard electrons plus a number of jets in the final state, something that should not be missed.
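
The bookkeeping of such a cascade is easy to sketch: the snippet below expands the chain above step by step until only stable objects remain. The decay table just transcribes the example in the text; electric charges and branching fractions are ignored, and the names are illustrative.

```python
from collections import Counter

# Decay table transcribed from the example chain; "j" stands for a light jet.
DECAYS = {
    "sbottom": ["b", "neutralino"],
    "neutralino": ["e", "selectron"],
    "selectron": ["e", "j", "j", "j"],
}


def final_state(particle):
    """Recursively expand a particle into its stable decay products."""
    if particle not in DECAYS:
        return [particle]
    products = []
    for daughter in DECAYS[particle]:
        products.extend(final_state(daughter))
    return products


state = final_state("sbottom")
counts = Counter(state)
print(state)
print(dict(counts))
```

Changing one entry of the table (say, a different lightest superparticle) immediately shows whether leptons survive in the final state.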

To wrap up, the first year at the LHC will likely end up being an "engineering run", where the standard model will be "discovered" to the important end of calibrating the detectors. However, if the new physics is exotic enough, and the stars are lucky enough, then there might be some real excitement in store.

Sunday, 27 September 2009

Resonating dark matter

On this blog I regularly follow the progress in dark matter building. One reason is that next-to-nothing is happening on the collider front: Tevatron invariably confirms the standard model predictions up to a few pathetic 2 point null sigma bleeps now and then. In these grim times particle theorists sit entrenched inside their old models waiting for the imminent LHC assault. The dark matter industry, on the other hand, enjoys a flood of exciting experimental data, including a number of puzzling results that might be hints of new physics.

One of these puzzles - the anomalous modulation signal reported by DAMA - continues to inspire theorists. It is a challenge to reconcile DAMA with null results from other experiments, and any model attempting that has to go beyond the simple picture of elastic scattering of dark matter on nuclei. The most plausible proposal so far is the so-called inelastic dark matter. Last week a new idea entered the market under the name of resonant dark matter. Since this blog warmly embraces all sorts of resonances I couldn't miss the opportunity to share a few words about it.

In the resonant dark matter scenario the dark matter particle is part of a larger multiplet that transforms under weak SU(2). This means that the dark matter particle (which as usual is electrically neutral) has partners of approximately the same mass that carry an electric charge. Quantum effects split the masses of charged and neutral particles, making the charged guys a bit heavier (this is completely analogous to the $\pi^+ - \pi^0$ mass splitting in the standard model). Most naturally, that splitting would be of order 100 MeV; some theoretical hocus-pocus is needed to lower it to 10 MeV (otherwise the splitting is too large compared to nuclear scales, and the idea cannot be implemented in practice), which is presumably the weakest point in this construction.

Now, when dark matter particles scatter on nuclei in a detector there is a possibility of forming a narrow bound state of the charged partner with an excited state of the nucleus, see the picture. That would imply that the scattering cross-section sharply peaks at a certain velocity corresponding to the resonance. The existence of the resonance is very sensitive to many nuclear parameters: mass, charge, atomic number and the energies of excitation levels. It is conceivable that the resonant enhancement occurs only for one target, say, iodine present in DAMA's sodium iodide crystals, while it is absent for other targets like germanium, silicon, xenon etc. that are employed in other dark matter experiments. For example, the resonant velocity for these other elements might be outside the range of velocities of dark matter in our galaxy (the escape velocity is some 500 km/s so that there is an upper limit to scattering velocities).
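
To get a feeling for the numbers, here is a back-of-the-envelope sketch of the largest kinetic energy available in the center-of-mass frame, E = μv²/2 with μ the reduced mass of the dark matter-nucleus system. It is nonrelativistic, uses the 500 km/s quoted above as the maximal velocity, and approximates the nuclear mass by the mass number times the atomic mass unit. The 100 GeV dark matter mass is purely a placeholder, not a value from the paper.

```python
C_KM_S = 299_792.458   # speed of light in km/s
AMU_GEV = 0.9315       # atomic mass unit in GeV (nuclear mass ~ A * AMU_GEV)


def reduced_mass(m1, m2):
    """Reduced mass of a two-body system, in the units of the inputs."""
    return m1 * m2 / (m1 + m2)


def max_cm_kinetic_energy_kev(m_dm_gev, mass_number, v_max_km_s=500.0):
    """Largest center-of-mass kinetic energy, E = mu * v^2 / 2, in keV."""
    mu = reduced_mass(m_dm_gev, mass_number * AMU_GEV)
    beta = v_max_km_s / C_KM_S
    return 0.5 * mu * beta ** 2 * 1.0e6  # GeV -> keV


M_DM_GEV = 100.0  # hypothetical dark matter mass, for illustration only
TARGETS = {"iodine": 127, "xenon": 131, "germanium": 73, "silicon": 28}
for target, mass_number in TARGETS.items():
    energy = max_cm_kinetic_energy_kev(M_DM_GEV, mass_number)
    print(f"{target:10s} A = {mass_number:3d}: E_max ~ {energy:5.1f} keV")
```

With these inputs the available energy is only tens of keV, so a resonance sitting even slightly above that window for a given nucleus is simply out of reach for halo dark matter.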

So, that looks like a perfect hideaway for DAMA, as other experiments would have a hard time excluding the resonant dark matter hypothesis in a model independent way. Fortunately, there is another ongoing dark matter experiment involving iodine: the Korean KIMS based on cesium iodide crystals. After one year of data taking the results from KIMS combined with those from DAMA put some constraints on the allowed values of the dark matter mass, the position of the resonance and its width, but they leave large chunks of allowed parameter space. More data from KIMS will surely shed more light on the resonant dark matter scenario.